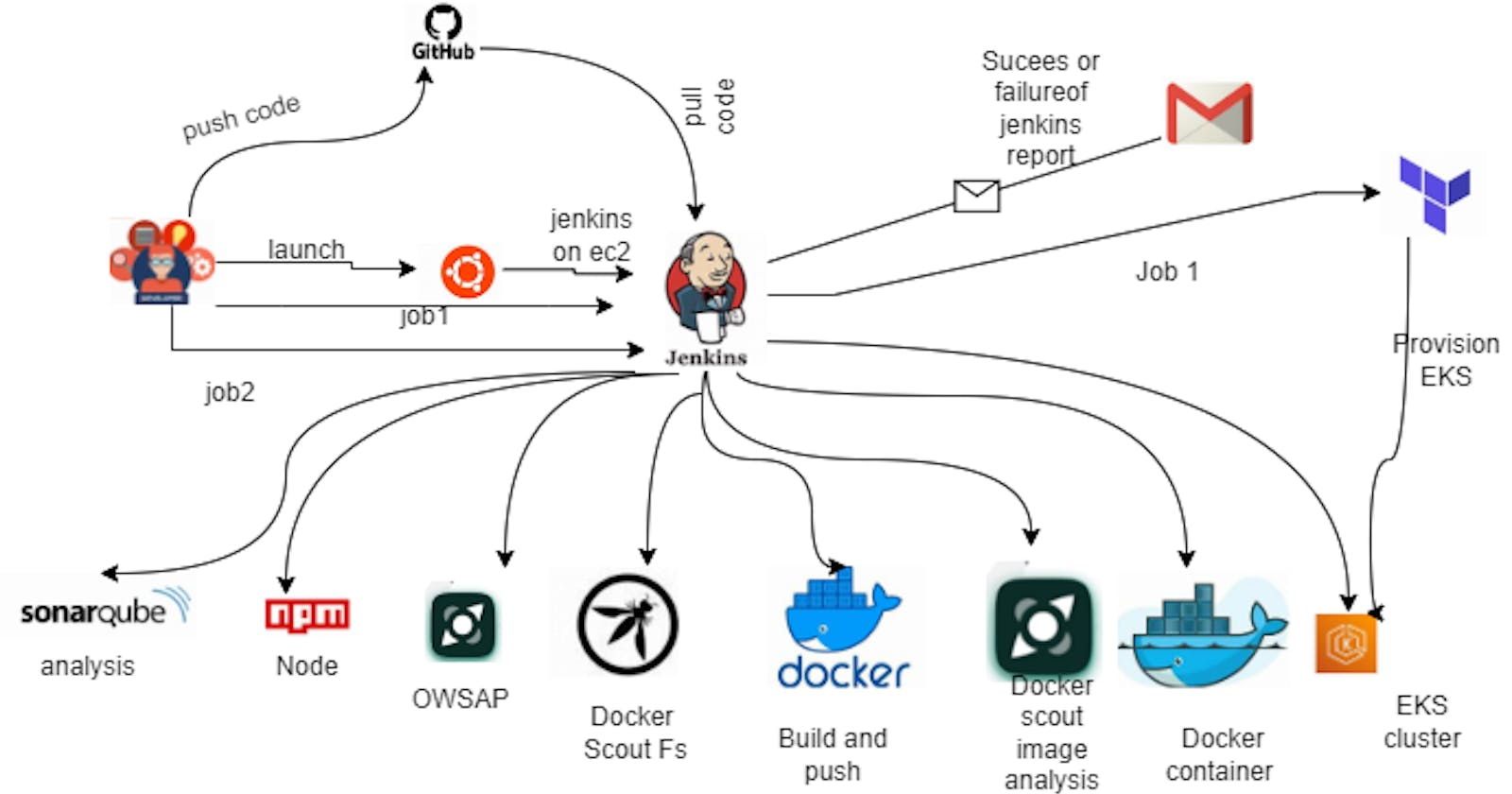

In the ever-changing landscape of software development and deployment, incorporating strong security practices into the development pipeline is now imperative. DevSecOps, which merges Development, Security, and Operations, emphasizes the significance of integrating security measures throughout the software development lifecycle. It promotes a proactive approach to tackle potential vulnerabilities and threats head-on.

This blog acts as a comprehensive manual for deploying a Hotstar clone—a well-known streaming platform—using DevSecOps principles on Amazon Web Services (AWS). The deployment process involves a range of tools and services like Docker, Jenkins, Java, SonarQube, AWS CLI, Kubectl, and Terraform to automate, secure, and streamline the deployment pipeline.

The journey starts with setting up an AWS EC2 instance configured with Ubuntu and assigning it a specific IAM role to ease the learning curve. Then, an automated script is devised to install essential tools and dependencies required for the deployment pipeline, ensuring consistency and efficiency in the setup process.

At the heart of this DevSecOps methodology is orchestration through Jenkins jobs, defining stages for tasks such as establishing an Amazon EKS cluster, deploying the Hotstar clone application, and implementing security measures at various deployment stages.

Integration of security practices is a vital component of this approach. By making use of SonarQube, OWASP, and Docker Scout, the blog demonstrates how static code analysis, security checks, and container security scans are seamlessly incorporated into the pipeline. These measures fortify the application against potential vulnerabilities, ensuring a resilient and secure deployment.

GitHub repository: https://github.com/Pardeep32/app-Hotstar-Clone.git

Prerequisites

AWS account setup

Basic knowledge of AWS services like EC2, EKS, LoadBalancer, IAM

Understanding of DevSecOps principles

Familiarity with Docker, Jenkins, Java, SonarQube, AWS CLI, kubectl, Terraform, and Docker Scout

Step-by-Step Deployment Process

Step 1: Setting up AWS EC2 Instance

Creating an EC2 instance with Ubuntu AMI, t2.large, and 30 GB storage

Assigning an IAM role with admin access is convenient for learning, but it conflicts with the principle of least privilege. Granting only the permissions a task needs limits the impact of a security breach or of unauthorized access, so use narrowly scoped roles in production environments and real-world scenarios.

Step 2: Installation of Required Tools on the Instance

Writing a script to automate the installation of:

Docker

Jenkins

Java

SonarQube container

AWS CLI

Kubectl

Terraform

Step 3: Jenkins Job Configuration

Creating Jenkins jobs for:

Creating an EKS cluster

Deploying the Hotstar clone application

Configuring the Jenkins job stages:

Sending files to SonarQube for static code analysis

Running npm install

Implementing OWASP for security checks

Installing and running Docker Scout for container security

Scanning files and Docker images with Docker Scout

Building and pushing Docker images

Deploying the application to the EKS cluster

Step 4: Clean-Up Process

Removing the EKS cluster

Deleting the IAM role

Terminating the Ubuntu instance

Step 1: Creating an EC2 Instance

Create an EC2 instance of type t2.large with an Ubuntu AMI and 30 GB of storage.
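The same instance can also be launched from the AWS CLI. This is a minimal sketch, not the method used in this walkthrough: the AMI ID is a placeholder you must replace, the key pair name hotstar is inferred from the hotstar.pem file used later, and /dev/sda1 is assumed to be the root device of the Ubuntu AMI. With DRY_RUN=1 (the default here) the command is only printed.

```bash
#!/usr/bin/env bash
set -eu

AMI_ID="${AMI_ID:-ami-0123456789abcdef0}"   # placeholder: an Ubuntu AMI ID for your region
KEY_NAME="${KEY_NAME:-hotstar}"            # assumed key pair name (matches hotstar.pem)
DRY_RUN="${DRY_RUN:-1}"                    # 1 = print the command instead of running it

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Launch one t2.large instance with a 30 GB root volume.
launch_instance() {
  run aws ec2 run-instances \
    --image-id "$AMI_ID" \
    --instance-type t2.large \
    --key-name "$KEY_NAME" \
    --count 1 \
    --block-device-mappings "DeviceName=/dev/sda1,Ebs={VolumeSize=30}"
}

launch_instance
```

Set DRY_RUN=0 only after replacing the placeholder values.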

Creating an IAM Role:

Sign in to the AWS Management Console: Sign in to the AWS Management Console using your AWS account credentials.

Navigate to IAM: In the AWS Management Console, navigate to the IAM dashboard.

Choose "Roles" from the left-hand menu: Click on "Roles" in the left-hand navigation pane to manage IAM roles.

Create Role: Click on the "Create role" button to start creating a new IAM role.

Select Trusted Entity Type: Choose "AWS service" as the trusted entity type, because an EC2 instance will assume the role.

Choose a Use Case: Select the use case that best describes your scenario. For EC2 instances, choose "EC2" as the use case.

Attach Policies: Attach policies to the role to define what permissions the EC2 instances associated with this role will have. You can choose from AWS managed policies, customer-managed policies, or inline policies.

Add Tags (Optional): Optionally, add tags to the role for better organization and management.

Review and Create: Review the role configuration and click on the "Create role" button to create the IAM role.

Attach this IAM role to the EC2 instance: select the instance, then choose Actions -> Security -> Modify IAM role.
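The console steps above can also be expressed with the AWS CLI. The sketch below is an illustration under assumptions: the role name and instance ID are placeholders, and AdministratorAccess is attached only because this walkthrough uses admin access for learning. In dry-run mode (the default) the commands are printed, not executed.

```bash
#!/usr/bin/env bash
set -eu

ROLE_NAME="${ROLE_NAME:-hotstar-ec2-role}"         # hypothetical role and profile name
INSTANCE_ID="${INSTANCE_ID:-i-0123456789abcdef0}"  # placeholder instance ID
DRY_RUN="${DRY_RUN:-1}"                            # 1 = print the commands only

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Trust policy that lets the EC2 service assume the role.
cat > trust-policy.json <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": { "Service": "ec2.amazonaws.com" },
      "Action": "sts:AssumeRole"
    }
  ]
}
EOF

create_role_and_attach() {
  run aws iam create-role --role-name "$ROLE_NAME" --assume-role-policy-document file://trust-policy.json
  run aws iam attach-role-policy --role-name "$ROLE_NAME" --policy-arn arn:aws:iam::aws:policy/AdministratorAccess
  # EC2 uses an instance profile as the container for the role.
  run aws iam create-instance-profile --instance-profile-name "$ROLE_NAME"
  run aws iam add-role-to-instance-profile --instance-profile-name "$ROLE_NAME" --role-name "$ROLE_NAME"
  run aws ec2 associate-iam-instance-profile --instance-id "$INSTANCE_ID" --iam-instance-profile "Name=$ROLE_NAME"
}

create_role_and_attach
```

For anything beyond a learning setup, swap the AdministratorAccess policy ARN for a policy scoped to the services the pipeline actually touches.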

Step 2: Installation of Required Tools on the Instance

Select the EC2 instance -> Connect -> SSH client, and copy the example ssh command.

Open a terminal, go to the directory where the downloaded key is stored, and paste the command:

ssh -i "hotstar.pem" ubuntu@ec2-35-182-97-36.ca-central-1.compute.amazonaws.com

You are now connected to your server.

Write two scripts to install the required tools.

sudo su #switch to root

vi script1.sh

Script 1 installs Java, Jenkins, and Docker:

#!/bin/bash

sudo apt update -y

#install Temurin JDK 17

sudo mkdir -p /etc/apt/keyrings

wget -O - https://packages.adoptium.net/artifactory/api/gpg/key/public | sudo tee /etc/apt/keyrings/adoptium.asc

echo "deb [signed-by=/etc/apt/keyrings/adoptium.asc] https://packages.adoptium.net/artifactory/deb $(awk -F= '/^VERSION_CODENAME/{print$2}' /etc/os-release) main" | sudo tee /etc/apt/sources.list.d/adoptium.list

sudo apt update -y

sudo apt install temurin-17-jdk -y

/usr/bin/java --version

#install jenkins

curl -fsSL https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key | sudo tee /usr/share/keyrings/jenkins-keyring.asc > /dev/null

echo deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/ | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null

sudo apt-get update -y

sudo apt-get install jenkins -y

sudo systemctl start jenkins

#install docker

#add Docker's official GPG key

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg -y

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

#add the repository to Apt sources

echo "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/ubuntu $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin -y

sudo usermod -aG docker ubuntu

newgrp docker

Give the script execute permission and run it:

chmod +x script1.sh

./script1.sh

Script 2 installs Terraform, kubectl, and the AWS CLI:

vi script2.sh

#!/bin/bash

#install terraform

sudo apt install wget -y

wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg

echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list

sudo apt update && sudo apt install terraform -y

#install kubectl (latest stable release, following the official Kubernetes install instructions)

curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl"

sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

#install AWS CLI

curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"

sudo apt-get install unzip -y

unzip awscliv2.zip

sudo ./aws/install

Give script2.sh execute permission and run it:

chmod +x script2.sh

./script2.sh

Check the status of Jenkins:

systemctl status jenkins

If the status shows inactive, use the following commands to enable and start Jenkins:

systemctl enable jenkins

systemctl start jenkins

Open a new browser tab and go to <public IP of the EC2 instance>:8080. Jenkins listens on port 8080, so make sure that port is allowed in the inbound rules of the instance's security group. The unlock page shows the path of the file that holds the initial admin password.

On the terminal:

sudo cat /var/lib/jenkins/secrets/initialAdminPassword

Copy this password into the Administrator password field, then install the plugins.

Now check whether Docker, the AWS CLI, Terraform, and kubectl are installed:

docker --version

aws --version

terraform --version

kubectl version --client
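The version checks can be wrapped in a small helper that reports every missing tool in one pass. This is a sketch; the function name check_tools is made up for illustration.

```bash
#!/usr/bin/env bash

# Report which of the given commands are available on PATH.
# Returns 0 when all are found, 1 when at least one is missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "$tool: found"
    else
      echo "$tool: MISSING"
      missing=1
    fi
  done
  return "$missing"
}

# Command-line tools installed by the two scripts in this guide.
# '|| true' keeps the shell going when some of them are absent.
check_tools java docker terraform kubectl aws || true
```

Jenkins is not in the list because it runs as a service; its state is checked with systemctl status jenkins.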

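Run the following command so that users such as jenkins can run Docker commands without permission-denied errors. Note that it does not add anyone to the docker group; it opens the Docker socket to every user on the machine, which is acceptable only on a throwaway learning instance.

sudo chmod 777 /var/run/docker.sock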
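A narrower alternative is to add the jenkins user to the docker group instead of opening the socket to everyone. The sketch below assumes Jenkins runs as the jenkins user and that the usual socket mode is 660 owned by root:docker. Commands are only printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -eu

DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands instead of running them

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

grant_docker_access() {
  user="$1"
  run sudo usermod -aG docker "$user"       # add the user to the docker group
  run sudo chmod 660 /var/run/docker.sock   # assumed default socket mode
  run sudo systemctl restart jenkins        # restart so the service picks up the new group
}

grant_docker_access jenkins
```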

Now run the SonarQube container on the EC2 instance:

docker run -d --name sonar -p 9000:9000 sonarqube:lts-community
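SonarQube takes a little while to start after the container is created. A hedged sketch of a readiness check follows; it assumes curl is installed and relies on SonarQube's /api/system/status endpoint, which returns a small JSON document with a status field. The function names are made up for illustration.

```bash
#!/usr/bin/env bash

# Extract the "status" value (for example UP or STARTING) from the status JSON.
sonar_status_from_json() {
  printf '%s' "$1" | sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([A-Z_]*\)".*/\1/p'
}

# Poll the server every 5 seconds until it reports UP, or give up after N tries.
wait_for_sonar() {
  url="${1:-http://localhost:9000}"
  tries="${2:-30}"
  while [ "$tries" -gt 0 ]; do
    body=$(curl -s "$url/api/system/status" || true)
    if [ "$(sonar_status_from_json "$body")" = "UP" ]; then
      return 0
    fi
    tries=$((tries - 1))
    sleep 5
  done
  return 1
}
```

On the instance, wait_for_sonar http://localhost:9000 30 returns once the web UI is ready to accept logins.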

SonarQube runs on port 9000, so add port 9000 to the inbound rules of the EC2 instance's security group.
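The inbound rules can also be added from the AWS CLI. In this sketch the security group ID and the source CIDR are placeholders; restricting the source to your own IP is a suggestion, not something the walkthrough prescribes. Commands are only printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -eu

SG_ID="${SG_ID:-sg-0123456789abcdef0}"   # placeholder security group ID
MY_CIDR="${MY_CIDR:-203.0.113.10/32}"    # placeholder: your own IP in CIDR form
DRY_RUN="${DRY_RUN:-1}"                  # 1 = print the command only

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Allow inbound TCP traffic on the given port from MY_CIDR.
open_port() {
  run aws ec2 authorize-security-group-ingress \
    --group-id "$SG_ID" --protocol tcp --port "$1" --cidr "$MY_CIDR"
}

open_port 8080   # Jenkins
open_port 9000   # SonarQube
```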

Open the public IP address of the EC2 instance on port 9000 in the browser: 35.182.97.36:9000

Log in to SonarQube with the default credentials: username admin and password admin.

Go to Jenkins -> admin (pardeepkaur) -> Configure -> Password

Enter a new password, click Save and Apply, and log in again.

Step 3: Jenkins Job Configuration

Step 3A: EKS Provision job

With the tools installed, go to Jenkins and add the Terraform plugin so that a pipeline job can provision AWS EKS.

Go to Jenkins dashboard -> Manage Jenkins -> Plugins

Under Available Plugins, search for Terraform and install it.

On the terminal, find where Terraform is installed (for example with which terraform):

Now come back to Manage Jenkins -> Tools

Add Terraform under Tools, pointing it at the install directory found on the terminal.

Apply and save.

Create an S3 bucket for the Terraform state, then change the bucket name (and the region) in backend.tf to match it.

Commit these changes to your copy of the repository as well.
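For orientation, an S3 backend block generally has the shape written out below. This is a sketch, not the file from the repository: the bucket name and state key are placeholders, and the output goes to backend.tf.example so nothing in the checkout is overwritten.

```bash
#!/usr/bin/env bash
set -eu

TF_STATE_BUCKET="${TF_STATE_BUCKET:-my-unique-tfstate-bucket}"   # placeholder bucket name
TF_STATE_KEY="${TF_STATE_KEY:-EKS/terraform.tfstate}"            # hypothetical state key
TF_STATE_REGION="${TF_STATE_REGION:-ca-central-1}"               # region used in this guide

# Write an example backend configuration next to the real one.
cat > backend.tf.example <<EOF
terraform {
  backend "s3" {
    bucket = "${TF_STATE_BUCKET}"
    key    = "${TF_STATE_KEY}"
    region = "${TF_STATE_REGION}"
  }
}
EOF

cat backend.tf.example
```

The bucket must already exist before terraform init runs, because Terraform does not create the backend bucket itself.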

Now create a new job for EKS provisioning:

Add the following pipeline script to the job:

pipeline{

agent any

stages {

stage('Checkout from Git'){

steps{

git branch: 'main', url: 'https://github.com/Pardeep32/app-Hotstar-Clone.git'

}

}

stage('Terraform version'){

steps{

sh 'terraform --version'

}

}

stage('Terraform init'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform init'

}

}

}

stage('Terraform validate'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform validate'

}

}

}

stage('Terraform plan'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform plan'

}

}

}

stage('Terraform apply/destroy'){

steps{

dir('EKS_TERRAFORM') {

sh 'terraform ${action} --auto-approve'

}

}

}

}

}

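Apply and save. The last stage reads an action parameter, so make the job parameterized with a choice parameter named action (values apply and destroy). Then click Build with Parameters and select apply as the action.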
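Because the action value is passed straight into the shell command, it can help to reject anything other than the two expected values. The guard below is a sketch of that idea, with a made-up function name; it is not part of the original pipeline.

```bash
#!/usr/bin/env bash

# Build the terraform command for a given action, accepting only apply or destroy.
terraform_cmd() {
  case "$1" in
    apply|destroy)
      echo "terraform $1 --auto-approve"
      ;;
    *)
      echo "unsupported action: $1" >&2
      return 1
      ;;
  esac
}

terraform_cmd apply
```

Inside the pipeline's sh step the same case statement could wrap the real terraform call, with "$action" as the value being checked.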

Check in the AWS console that the EKS cluster was created.

The cluster's worker node is an EC2 instance of type t2.medium.

The Terraform state file is saved in the remote backend.

Step 3B: Hotstar job

Plugins installation & setup (Java, Sonar, Nodejs, owasp, Docker)

Go to Jenkins dashboard

Manage Jenkins –> Plugins –> Available Plugins

Search for the plugins below:

Eclipse Temurin installer

SonarQube Scanner

NodeJS

OWASP Dependency-Check

Docker

Docker Commons

Docker Pipeline

Docker API

Docker-build-step

Configure plugins in Global Tool Configuration

Go to Manage Jenkins -> Tools and add JDK 17 and NodeJS 16, plus a SonarQube Scanner, a Dependency-Check installation, and a Docker installation. Name them jdk17, node16, sonar-scanner, DP-Check, and docker, because the pipeline refers to them by these names. Click on Apply and Save.

Create a new item:

Configure Sonar Server in Manage Jenkins

Grab the public IP address of your EC2 instance. SonarQube runs on port 9000, so open <Public IP>:9000. In SonarQube, click on Administration -> Security -> Users -> Tokens, give the token a name, and click on Generate Token.

Copy the token.

Go to Jenkins Dashboard -> Manage Jenkins -> Credentials and add a credential of kind Secret text containing the token. The pipeline refers to it by the ID Sonar-token.

Next, tell Jenkins where SonarQube is running. With the token, Jenkins is able to access SonarQube.

Dashboard -> Manage Jenkins -> System: add a SonarQube server named sonar-server (the name used in the pipeline), the address where SonarQube is running, and the token credential.

Click on Apply and Save.

In the SonarQube dashboard, also add a webhook so that the quality gate result is sent back to Jenkins:

Administration -> Configuration -> Webhooks, with the URL http://<jenkins-public-ip>:8080/sonarqube-webhook/

Add Docker Hub credentials under Manage Jenkins -> Credentials -> System -> Global credentials so that Jenkins can access Docker Hub. (The ID and description should be docker.)

Install Docker Scout on the EC2 instance:

curl -sSfL https://raw.githubusercontent.com/docker/scout-cli/main/install.sh | sh -s -- -b /usr/local/bin

Add this pipeline script to the Hotstar job:

pipeline{

agent any

tools{

jdk 'jdk17'

nodejs 'node16'

}

environment {

SCANNER_HOME=tool 'sonar-scanner'

}

stages {

stage('clean workspace'){

steps{

cleanWs()

}

}

stage('Checkout from Git'){

steps{

git branch: 'main', url: 'https://github.com/Pardeep32/app-Hotstar-Clone.git'

}

}

stage("Sonarqube Analysis "){

steps{

withSonarQubeEnv('sonar-server') {

sh ''' $SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Hotstar \

-Dsonar.projectKey=Hotstar'''

}

}

}

stage("quality gate"){

steps {

script {

waitForQualityGate abortPipeline: false, credentialsId: 'Sonar-token'

}

}

}

stage('Install Dependencies') {

steps {

sh "npm install"

}

}

stage('OWASP FS SCAN') {

steps {

dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'

dependencyCheckPublisher pattern: '**/dependency-check-report.xml'

}

}

stage('Docker Scout FS') {

steps {

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh 'docker-scout quickview fs://.'

sh 'docker-scout cves fs://.'

}

}

}

}

stage("Docker Build & Push"){

steps{

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh "docker build -t hotstar ."

sh "docker tag hotstar pardeepkaur/hotstar:latest "

sh "docker push pardeepkaur/hotstar:latest"

}

}

}

}

stage('Docker Scout Image') {

steps {

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh 'docker-scout quickview pardeepkaur/hotstar:latest'

sh 'docker-scout cves pardeepkaur/hotstar:latest'

sh 'docker-scout recommendations pardeepkaur/hotstar:latest'

}

}

}

}

stage("deploy_docker"){

steps{

sh "docker rm -f hotstar || true"

sh "docker run -d --name hotstar -p 3000:3000 pardeepkaur/hotstar:latest"

}

}

}

}

Click on Apply and save.

Build now.

Stage view.

SonarQube analysis.

Passed SonarQube quality gate.

Dependency-Check analysis.

Docker Scout analysis.

To check the number of containers running on the EC2 instance:

docker ps

There are two containers running: the Hotstar container and the SonarQube container.

Go to the Jenkins instance terminal and enter the command below to update the kubeconfig file.

aws eks update-kubeconfig --name EKS_CLOUD --region ca-central-1

Now print the generated config:

cat /root/.kube/config

Copy the output and save it on your local machine (for example on the desktop) as secret-k8s.txt.

apiVersion: v1

clusters:

- cluster:

certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURCVENDQWUyZ0F3SUJBZ0lJRWw2M1Z2Wk9MbDB3RFFZSktvWklodmNOQVFFTEJRQXdGVEVUTUJFR0ExVUUKQXhNS2EzVmlaWEp1WlhSbGN6QWVGdzB5TkRBek1USXhPREUyTURGYUZ3MHpOREF6TVRBeE9ESXhNREZhTUJVeApFekFSQmdOVkJBTVRDbXQxWW1WeWJtVjBaWE13Z2dFaU1BMEdDU3FHU0liM0RRRUJBUVVBQTRJQkR3QXdnZ0VLCkFvSUJBUURuKzZnN29BQ2d3SnJiVEVJQ2dyYXhFQ28wSGd6ZGRzUTMrN1lDTUxlYXBPSEN3Y21meVhtdytpRi8KUUlkUVFtUGV4c1hrN0YyS3hkeFhoSzRtTU04M2s0bU95MlM0WHJGeXpYeHd6b2hxSGcrOFJyL01xdllvWktTSApYaWp4QTlPVDdDMW55V2dGVm0zcHNxNHhSRVdTVzNtSzlUZ0g5bithNlhjVHNYbkRZSlFJbjZ3WTZKR2RVbi9hCm1MWXUwdjU4S0FlY2JaQW84VEVMYUJqZ25SVHJTRDk4cG9JTHdWVlRBVFZnNnYzWnBiK2RlbmlNYzJZeDJ4bWkKWHN4d3FOc3hyUFhVeTVwNEdoTFJGVzMzYVZwY3FmaHdQeHB4UmZEZ040KzF6YW9xdFdMc0E4dDhCODNoNFAydApyMDFvZlZBekxhbExHbGNLSlQwRUJ3N01pbWdUQWdNQkFBR2pXVEJYTUE0R0ExVWREd0VCL3dRRUF3SUNwREFQCkJnTlZIUk1CQWY4RUJUQURBUUgvTUIwR0ExVWREZ1FXQkJRNWFmSU9vT2NsV0puOGthdXYyN1NISWwzZEVUQVYKQmdOVkhSRUVEakFNZ2dwcmRXSmxjbTVsZEdWek1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQ0lKeDBEcTNLTQp6dGFHVkJxbk01MmxqWVlFK0Y4eTQxcUV0ZDhyLzJRd1pFMDQrQS9DOVdTb1YrVXlNbE8veDlzOWZPNFhaemJECmVldmlSLzJTYW43QThyUm1NU1RtZ1dtM3lUVnFOZUZpUnQzaEc0TjZRdHExUlBudG9GcThrbmtQRng0L3FNSFgKa3JvcEtnTzl4aFl3MFZSbGdFN0UwZjNjaHNGRW5ISzBVam1iR1RtRkNyemh5R0RmSEVDUDNVRVZYZnkyN2ZjUgpjWkNKWEFlN05YNHZXdS9Ga3VRUkk3R3QzaUVtdVJXOUtXL1kweHdXN2xDVnBrWGMza0phN05ZUzVsamprWk5uCjhoMkhEaXpvSU9aQisvMkt1MU1HcDJPVVJmanRRWEZUL1VvZk9jYldnOW83K2M1aksrQlhoZ3FJR2pOUTY5QTIKVnB2bk93U21ISkRYCi0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

server: https://5A0A1F4D004A601558FEE65B5F7CF0F0.gr7.ca-central-1.eks.amazonaws.com

name: arn:aws:eks:ca-central-1:654654392783:cluster/EKS_CLOUD

contexts:

- context:

cluster: arn:aws:eks:ca-central-1:654654392783:cluster/EKS_CLOUD

user: arn:aws:eks:ca-central-1:654654392783:cluster/EKS_CLOUD

name: arn:aws:eks:ca-central-1:654654392783:cluster/EKS_CLOUD

current-context: arn:aws:eks:ca-central-1:654654392783:cluster/EKS_CLOUD

kind: Config

preferences: {}

users:

- name: arn:aws:eks:ca-central-1:654654392783:cluster/EKS_CLOUD

user:

exec:

apiVersion: client.authentication.k8s.io/v1beta1

args:

- --region

- ca-central-1

- eks

- get-token

- --cluster-name

- EKS_CLOUD

- --output

- json

command: aws

Run kubectl to check that the worker nodes are up and running.

kubectl get node

Install the Kubernetes plugins in Jenkins. The withKubeConfig step used below is provided by the Kubernetes CLI plugin.

After the plugins are installed, go to Manage Jenkins -> Credentials -> System -> Global credentials and upload the secret-k8s.txt file from your local machine as a Secret file with the ID k8s.

The final step is to deploy to the Kubernetes cluster, so add this stage to the Hotstar pipeline in Jenkins.

stage('Deploy to kubernets'){

steps{

script{

dir('K8S') {

withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'k8s', namespace: '', restrictKubeConfigAccess: false, serverUrl: '') {

sh 'kubectl apply -f deployment.yml'

sh 'kubectl apply -f service.yml'

}

}

}

}

}

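Run the pipeline again.

The application is exposed through a Kubernetes Service of type LoadBalancer, defined in a YAML manifest (service.yml in the K8S directory, which the stage above applies). On EKS, such a Service makes AWS provision a load balancer in front of the cluster nodes.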
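A Service of type LoadBalancer typically looks like the manifest written out below. This is a sketch and not the repository's service.yml: the Service name, the app label, and port 80 are assumptions, while target port 3000 follows the container port used in the docker run step.

```bash
#!/usr/bin/env bash
set -eu

# Write an example manifest; names and labels are illustrative only.
cat > service-example.yml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: hotstar-service        # hypothetical name
spec:
  type: LoadBalancer
  selector:
    app: hotstar               # assumed label; must match the pod labels in the Deployment
  ports:
    - port: 80                 # port exposed by the load balancer (assumed)
      targetPort: 3000         # container port, as in "docker run -p 3000:3000"
EOF

cat service-example.yml
```

After kubectl apply -f service-example.yml, kubectl get svc shows the external address of the load balancer once AWS has provisioned it.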

The load balancer's target instance is the cluster's instance. Make sure the load balancer's security group is allowed in the inbound rules of the cluster's security group, then copy the address of the load balancer and paste it into a new browser tab.

The following security group belongs to the cluster's instance. Add the load balancer's security group to its inbound rules, so that the instance accepts traffic from the load balancer.

Next, I changed the EKS cluster configuration and set the desired number of nodes to 2, which creates the cluster with 2 instances of type t2.medium.

Run the Hotstar pipeline again.

Now the load balancer has 2 targets.

To send email notifications from Jenkins, set up the email sections in the Jenkins system configuration. The steps are as follows.

First, install the Email Extension plugin.

In your Gmail account, make sure that 2-Step Verification is on.

Go to Security -> App passwords.

Create a new app password with a unique name.

Generate the password and copy it.

Add these credentials under Manage Jenkins -> Credentials -> System -> Global credentials: provide your Gmail address as the username and the newly generated app password as the password. The ID must be mail.

Configure Jenkins SMTP Server:

Navigate to your Jenkins dashboard and click on "Manage Jenkins" in the sidebar.

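Select "System" (named "Configure System" in older Jenkins versions).

Scroll down to the "E-mail Notification" section. The emailext step used in the pipeline reads its settings from the "Extended E-mail Notification" section, so fill in that section as well.

Enter the SMTP server details provided by your email provider: the SMTP server host, port, and credentials (username and password). For Gmail, the host is smtp.gmail.com, with port 465 when SSL is enabled.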

You can also configure advanced settings such as SSL/TLS encryption and response timeout.

Test Email Configuration:

Scroll down to the bottom of the configuration page and click on the "Test configuration by sending test e-mail" button.

Enter an email address to which you want to send a test email.

Click on "Test configuration". If the configuration is correct, you should receive a test email.

Whether the pipeline succeeds or fails, an email is sent to your address by the post block at the end of the pipeline.

pipeline{

agent any

tools{

jdk 'jdk17'

nodejs 'node16'

}

environment {

SCANNER_HOME=tool 'sonar-scanner'

}

stages {

stage('clean workspace'){

steps{

cleanWs()

}

}

stage('Checkout from Git'){

steps{

git branch: 'main', url: 'https://github.com/Pardeep32/app-Hotstar-Clone.git'

}

}

stage("Sonarqube Analysis "){

steps{

withSonarQubeEnv('sonar-server') {

sh ''' $SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Hotstar \

-Dsonar.projectKey=Hotstar'''

}

}

}

stage("quality gate"){

steps {

script {

waitForQualityGate abortPipeline: false, credentialsId: 'Sonar-token'

}

}

}

stage('Install Dependencies') {

steps {

sh "npm install"

}

}

stage('OWASP FS SCAN') {

steps {

dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'

dependencyCheckPublisher pattern: '**/dependency-check-report.xml'

}

}

stage('Docker Scout FS') {

steps {

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh 'docker-scout quickview fs://.'

sh 'docker-scout cves fs://.'

}

}

}

}

stage("Docker Build & Push"){

steps{

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh "docker build -t hotstar ."

sh "docker tag hotstar pardeepkaur/hotstar:latest "

sh "docker push pardeepkaur/hotstar:latest"

}

}

}

}

stage('Docker Scout Image') {

steps {

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh 'docker-scout quickview pardeepkaur/hotstar:latest'

sh 'docker-scout cves pardeepkaur/hotstar:latest'

sh 'docker-scout recommendations pardeepkaur/hotstar:latest'

}

}

}

}

stage("deploy_docker"){

steps{

sh "docker rm -f hotstar || true"

sh "docker run -d --name hotstar -p 3000:3000 pardeepkaur/hotstar:latest"

}

}

stage('Deploy to kubernets'){

steps{

script{

dir('K8S') {

withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'k8s', namespace: '', restrictKubeConfigAccess: false, serverUrl: '') {

sh 'kubectl apply -f deployment.yml'

sh 'kubectl apply -f service.yml'

}

}

}

}

}

}

post {

always {

emailext attachLog: true,

subject: "'${currentBuild.result}'",

body: "Project: ${env.JOB_NAME}<br/>" +

"Build Number: ${env.BUILD_NUMBER}<br/>" +

"URL: ${env.BUILD_URL}<br/>",

to: 'deepnabha20@gmail.com'

}

}

}

Build now:

To save the output of Docker Scout in separate text files and attach them to the email, modify the Jenkins job as follows:

pipeline{

agent any

tools{

jdk 'jdk17'

nodejs 'node16'

}

environment {

SCANNER_HOME=tool 'sonar-scanner'

}

stages {

stage('clean workspace'){

steps{

cleanWs()

}

}

stage('Checkout from Git'){

steps{

git branch: 'main', url: 'https://github.com/Pardeep32/app-Hotstar-Clone.git'

}

}

stage("Sonarqube Analysis "){

steps{

withSonarQubeEnv('sonar-server') {

sh ''' $SCANNER_HOME/bin/sonar-scanner -Dsonar.projectName=Hotstar \

-Dsonar.projectKey=Hotstar'''

}

}

}

stage("quality gate"){

steps {

script {

waitForQualityGate abortPipeline: false, credentialsId: 'Sonar-token'

}

}

}

stage('Install Dependencies') {

steps {

sh "npm install"

}

}

stage('OWASP FS SCAN') {

steps {

dependencyCheck additionalArguments: '--scan ./ --disableYarnAudit --disableNodeAudit', odcInstallation: 'DP-Check'

dependencyCheckPublisher pattern: '**/dependency-check-report.xml'

}

}

stage('Docker Scout FS') {

steps {

script {

withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {

sh 'docker-scout quickview fs://. > docker-scoutquickview11111.txt'

sh 'docker-scout cves fs://. > docker-scoutcves22222.txt'

}

}

}

}

stage("Docker Build & Push"){

steps{

script{

withDockerRegistry(credentialsId: 'docker', toolName: 'docker'){

sh "docker build -t hotstar ."

sh "docker tag hotstar pardeepkaur/hotstar:latest "

sh "docker push pardeepkaur/hotstar:latest"

}

}

}

}

stage('Docker Scout Image') {

steps {

script {

withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {

sh "docker-scout quickview pardeepkaur/hotstar:latest > docker-scoutquickview.txt"

sh "docker-scout cves pardeepkaur/hotstar:latest > docker-scoutcves.txt"

sh "docker-scout recommendations pardeepkaur/hotstar:latest > docker-scoutrecommendations.txt"

}

}

}

}

stage("deploy_docker"){

steps{

sh "docker rm -f hotstar || true"

sh "docker run -d --name hotstar -p 3000:3000 pardeepkaur/hotstar:latest"

}

}

stage('Deploy to kubernets'){

steps{

script{

dir('K8S') {

withKubeConfig(caCertificate: '', clusterName: '', contextName: '', credentialsId: 'k8s', namespace: '', restrictKubeConfigAccess: false, serverUrl: '') {

sh 'kubectl apply -f deployment.yml'

sh 'kubectl apply -f service.yml'

}

}

}

}

}

}

post {

always {

emailext attachLog: true,

subject: "'${currentBuild.result}'",

body: "Project: ${env.JOB_NAME}<br/>" +

"Build Number: ${env.BUILD_NUMBER}<br/>" +

"URL: ${env.BUILD_URL}<br/>",

to: 'deepnabha20@gmail.com',

attachmentsPattern: 'docker-scoutcves.txt, docker-scoutquickview.txt, docker-scoutrecommendations.txt, docker-scoutcves22222.txt, docker-scoutquickview11111.txt'

}

}

}

Copy the load balancer address and paste it into a new browser tab.

At the end, destroy the cluster by running the EKS provisioning pipeline with the action set to destroy. This deletes the EKS cluster.

Then delete the IAM role and terminate the EC2 instance of type t2.large.
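The instance and the IAM role can also be removed from the AWS CLI. The sketch below uses placeholder names (the role name and instance ID are not from this guide) and assumes the role was attached through an instance profile of the same name with the AdministratorAccess policy. Commands are only printed unless DRY_RUN=0.

```bash
#!/usr/bin/env bash
set -eu

ROLE_NAME="${ROLE_NAME:-hotstar-ec2-role}"         # hypothetical role and profile name
INSTANCE_ID="${INSTANCE_ID:-i-0123456789abcdef0}"  # placeholder instance ID
DRY_RUN="${DRY_RUN:-1}"                            # 1 = print the commands only

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

cleanup_resources() {
  run aws ec2 terminate-instances --instance-ids "$INSTANCE_ID"
  # A role can only be deleted after it is removed from profiles and its policies are detached.
  run aws iam remove-role-from-instance-profile --instance-profile-name "$ROLE_NAME" --role-name "$ROLE_NAME"
  run aws iam delete-instance-profile --instance-profile-name "$ROLE_NAME"
  run aws iam detach-role-policy --role-name "$ROLE_NAME" --policy-arn arn:aws:iam::aws:policy/AdministratorAccess
  run aws iam delete-role --role-name "$ROLE_NAME"
}

cleanup_resources
```

Run this only after the EKS cluster has been destroyed through the pipeline, since that job runs on the instance being terminated.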

Congratulations on successfully deploying your Hotstar clone using DevSecOps practices on AWS! This journey has underscored the importance of seamlessly integrating security measures into your deployment pipeline. By doing so, you've not only enhanced efficiency but also established a strong defense against potential threats.

Stay Connected: We hope this guide has been insightful and valuable for your learning journey. Don't hesitate to reach out with questions, feedback, or requests for further topics. Follow me for tech tutorials, guides, and updates:

hashnode: https://hashnode.com/pardeep kaur

linkedin: https://www.linkedin.com/in/pardeep-kaur-kp3244/

Thank you for embarking on this DevSecOps journey with us. Keep innovating, securing, and deploying your applications with confidence!