Designed and deployed a scalable two-tier application on AWS EC2 using Docker Compose, with Amazon RDS for MySQL

Create an IAM role:

Sign in to the AWS Management Console: Go to the AWS Management Console and sign in with your AWS account.

Open the IAM Console: Once you're logged in, navigate to the "Services" menu, then select "IAM" under the "Security, Identity, & Compliance" section.

Create a New Role: In the IAM console, select "Roles" from the left-hand navigation pane, and then click the "Create role" button.

Select the Type of Trusted Entity:

- Choose the type of trusted entity for your role. This depends on the use case. For example, if you're creating a role for an AWS service like EC2, choose "AWS service." If you're creating a role for a different AWS account, choose "Another AWS account." For this example, I'll choose "AWS service."

Choose a Use Case:

- Select the service that will use the role. For a role used by an EC2 instance, select "EC2." This sets the role's trust policy so that EC2 instances are allowed to assume the role. The permissions themselves are granted in the next step.

Attach Permissions:

- Attach one or more permissions policies to the role, either AWS managed policies or custom policies you have created for specific requirements. These policies define what actions the role is allowed to perform.

Review:

- Review the role details and give the role a meaningful name and description.

Create the Role:

- Once you've reviewed and confirmed the details, click "Create role."

That's it! You've now created an IAM role. After creating the role, you can associate it with the resources that need to assume the role, such as EC2 instances, Lambda functions, or other AWS services. Be sure to follow the principle of least privilege when assigning permissions to roles, granting only the permissions necessary for the intended tasks.
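As a sketch of what choosing "AWS service" and then "EC2" produces, the role's trust policy is a JSON document that allows the EC2 service principal (ec2.amazonaws.com) to call sts:AssumeRole. The helper name service_trust_policy is hypothetical; the JSON layout follows the standard IAM policy grammar.

```python
import json


def service_trust_policy(service: str) -> dict:
    """Hypothetical helper: trust policy letting an AWS service assume a role.

    Picking "AWS service" -> "EC2" in the console corresponds to
    service="ec2.amazonaws.com".
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": service},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy in the same JSON form the IAM console displays.
    print(json.dumps(service_trust_policy("ec2.amazonaws.com"), indent=2))
```

The trust policy only controls who may assume the role; what the role can do is decided separately by the permissions policies attached in the "Attach Permissions" step.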

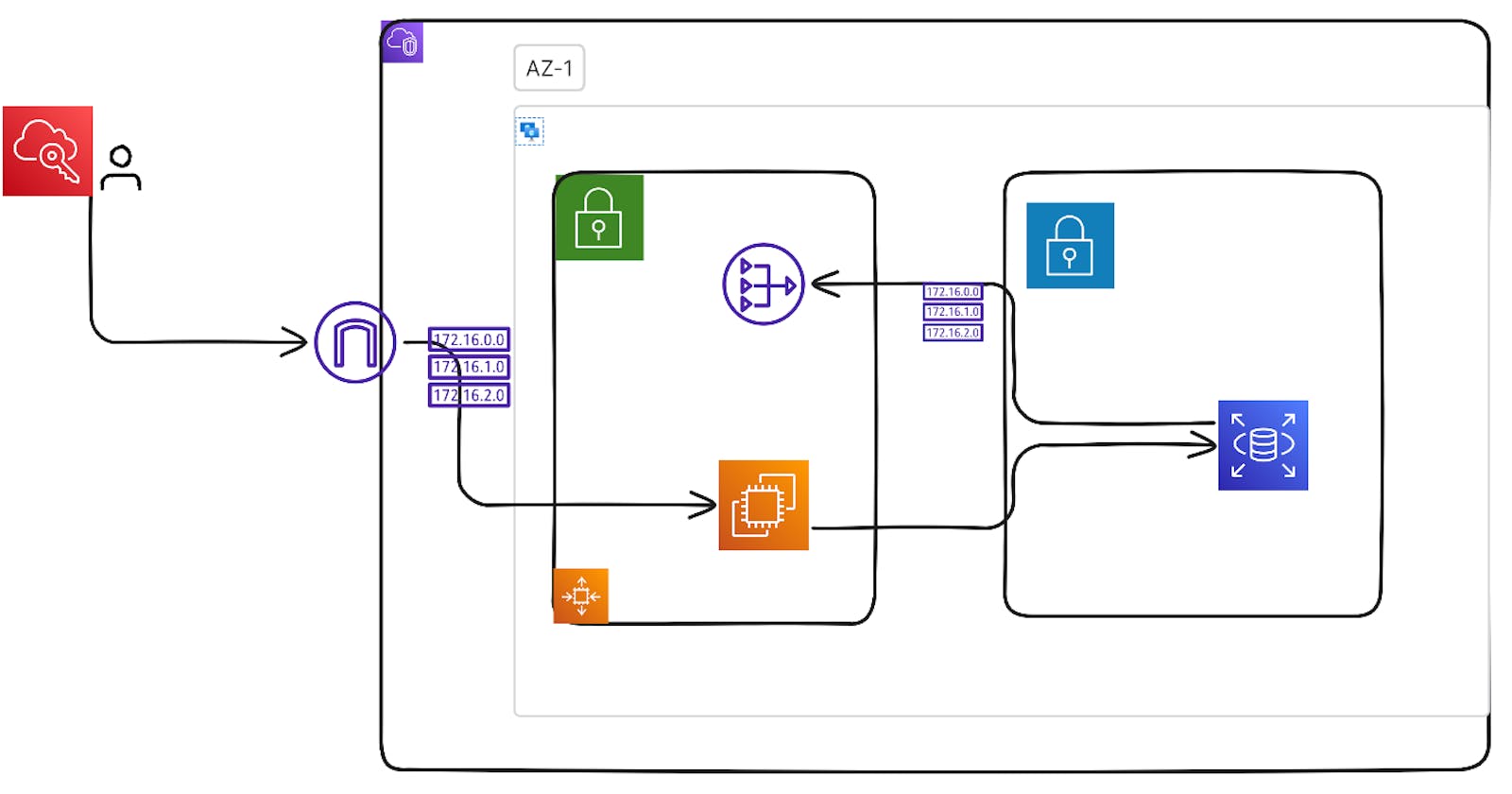

Designed and Configured AWS VPC Infrastructure for Secure Application Deployment

Created a Virtual Private Cloud (VPC) named "my-vpc" with a CIDR block of 10.0.0.0/16 to isolate and manage the network environment effectively.

Established two subnets within the VPC, a public subnet with CIDR 10.0.0.0/24 and a private subnet with CIDR 10.0.1.0/24, to segment resources for better security.
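The address plan can be sanity-checked with Python's standard ipaddress module: each subnet must fall inside the VPC's CIDR block and the subnets must not overlap. This is a minimal sketch using the CIDR blocks above; the helper names are hypothetical. The usable_ips helper reflects the fact that AWS reserves five addresses in every subnet (the first four and the last one).

```python
import ipaddress

# CIDR blocks from the VPC design above.
VPC = ipaddress.ip_network("10.0.0.0/16")
SUBNETS = {
    "public": ipaddress.ip_network("10.0.0.0/24"),
    "private": ipaddress.ip_network("10.0.1.0/24"),
}


def check_subnet_plan(vpc, subnets):
    """Return a list of problems: subnets outside the VPC or overlapping each other."""
    problems = []
    for name, net in subnets.items():
        if not net.subnet_of(vpc):
            problems.append(f"{name} {net} is outside {vpc}")
    items = list(subnets.items())
    for i, (name_a, a) in enumerate(items):
        for name_b, b in items[i + 1:]:
            if a.overlaps(b):
                problems.append(f"{name_a} {a} overlaps {name_b} {b}")
    return problems


def usable_ips(net):
    # AWS reserves 5 addresses per subnet: network, VPC router, DNS,
    # one reserved for future use, and the last (broadcast) address.
    return net.num_addresses - 5


if __name__ == "__main__":
    print(check_subnet_plan(VPC, SUBNETS))  # []
    for name, net in SUBNETS.items():
        print(name, net, usable_ips(net))
```

With two /24 subnets each subnet offers 251 usable addresses, which leaves most of the /16 free for additional subnets later.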

Configured a route table for the public subnet with a route to an Internet Gateway, giving the subnet direct inbound and outbound internet connectivity.

Set up a Network Address Translation (NAT) Gateway in the public subnet so that resources in the private subnet can initiate outbound connections to the internet (for example, to download updates) while remaining unreachable from it.

Launched an Amazon Elastic Compute Cloud (EC2) instance in the public subnet to host the application and receive external traffic.

Deployed an Amazon RDS (Relational Database Service) instance in the private subnet to store the application's database, isolated from direct internet access. Note that RDS requires a DB subnet group spanning at least two Availability Zones, so in practice a second private subnet in another Availability Zone is needed.

This infrastructure design ensures that the application is accessible from the internet while maintaining the security of sensitive data by placing the database in a private subnet, allowing the EC2 instance in the public subnet to serve as a secure access point for external traffic.

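When the RDS instance is created, traffic destined for addresses in 172.31.0.0/16 (the CIDR block of the default VPC) is routed to the local target.

Configure the public route table: the 0.0.0.0/0 route sends all traffic that is not destined for the VPC to the internet gateway, while the local route keeps traffic within the VPC's CIDR block inside the VPC.

Configure the private route table: the 0.0.0.0/0 route sends all traffic that is not destined for the VPC to the NAT gateway, while traffic within the VPC's CIDR block stays local.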
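A VPC route table picks the most specific route that matches a packet's destination (longest prefix match), which is why the local route wins for in-VPC addresses even though 0.0.0.0/0 also matches them. The sketch below models the two route tables above with the standard ipaddress module; "igw" and "nat" are placeholder names standing in for the real gateway IDs, and route_target is a hypothetical helper.

```python
import ipaddress

# (destination CIDR, target) pairs; "igw" and "nat" are placeholders
# for the Internet Gateway and NAT Gateway IDs.
PUBLIC_ROUTES = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "igw")]
PRIVATE_ROUTES = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "nat")]


def route_target(routes, destination):
    """Return the target of the most specific route matching destination, or None."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in routes
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # Longest prefix (largest prefixlen) is the most specific route.
    _, target = max(matches, key=lambda m: m[0].prefixlen)
    return target


if __name__ == "__main__":
    print(route_target(PRIVATE_ROUTES, "10.0.0.15"))  # local
    print(route_target(PRIVATE_ROUTES, "8.8.8.8"))    # nat
    print(route_target(PUBLIC_ROUTES, "8.8.8.8"))     # igw
```

So the RDS instance in the private subnet reaches the EC2 instance through the local route, and any outbound internet request it makes leaves through the NAT gateway.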

EC2 Instance Provisioning:

Deployed an Amazon Elastic Compute Cloud (EC2) instance named "docker-flask-instance" within the public subnet.

Installed Docker on the EC2 instance, preparing it for containerized application deployment.

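Now connect to the EC2 instance over SSH. For an Ubuntu AMI the default user is ubuntu; replace the key file and IP address with your own:

ssh -i <your-key>.pem ubuntu@<EC2-public-IP>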

Application Deployment and Containerization:

Cloned the application code onto the EC2 instance and crafted a Dockerfile for containerization.

Utilized Docker Compose to define and manage the "backend" service, passing the connection details for the Amazon RDS instance to the container as environment variables.

Employed Docker volumes for data persistence, ensuring data resilience across container restarts.

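Commands:

sudo apt update

sudo apt install docker.io

echo $USER

sudo usermod -aG docker $USER

sudo reboot

The reboot (or simply logging out and back in) is needed because a new group membership only takes effect in a new login session.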
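The difference between "the user is in the docker group" and "the current shell has that group" can be checked with Python's standard grp, pwd, and os modules (Linux only). This sketch uses hypothetical helper names: listed_in_group reads the group database that usermod edits, while session_has_group looks at the groups of the running process, which only change after a new login.

```python
import grp
import os
import pwd


def listed_in_group(user: str, group: str) -> bool:
    """True if the group database lists user in group, or it is user's primary group."""
    try:
        g = grp.getgrnam(group)
        primary_gid = pwd.getpwnam(user).pw_gid
    except KeyError:  # unknown group or unknown user
        return False
    return user in g.gr_mem or primary_gid == g.gr_gid


def session_has_group(group: str) -> bool:
    """True if the current process already carries the group's GID."""
    try:
        gid = grp.getgrnam(group).gr_gid
    except KeyError:
        return False
    return gid in os.getgroups() or gid == os.getgid()


if __name__ == "__main__":
    user = pwd.getpwuid(os.getuid()).pw_name
    print("listed in docker group:", listed_in_group(user, "docker"))
    print("active in this session:", session_has_group("docker"))
```

Right after usermod, the first check typically reports True and the second False; after the reboot or a fresh login both should be True.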

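Alternatively, instead of adding the user to the docker group, you can give your user ownership of the Docker socket. This is a quicker workaround, but /var/run is recreated at every boot, so the change does not survive a reboot:

sudo apt update

sudo apt install docker.io

whoami

sudo chown $USER /var/run/docker.sock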

Now clone the application code onto the EC2 instance.

Create a Dockerfile: vim Dockerfile

Now install Docker Compose:

sudo apt install docker-compose

Accessing and Interacting with the Application:

Accessed the application via the EC2 instance's public IP address and port 5000, enabling data entry.

Installed the MySQL client on the EC2 instance to interact with the database directly.

Create a volume to persist data, so that the data survives even if the container crashes or is stopped.

Now write the docker-compose.yml file:

vim docker-compose.yml

version: '3'
services:
  backend:
    build:
      context: .
    ports:
      - "5000:5000"
    environment:
      MYSQL_HOST: database-instance.c5wcc3sarvso.ca-central-1.rds.amazonaws.com
      MYSQL_USER: admin
      MYSQL_PASSWORD: ${MYSQL_PASSWORD}  # set in the shell or in a .env file next to this file; avoid hard-coding it
      MYSQL_DB: db1
    volumes:
      - falsk-app:/app/volumes  # mount the named volume "falsk-app" at /app/volumes
volumes:
  falsk-app:  # define the named volume "falsk-app"

This Docker Compose file defines a single service named "backend" and describes how to build and run its container. The key components are:

Version: the Compose file format version, here version 3.

Services: the services (containers) that make up the application.

backend: the name of the service being defined.

Build: how to build the Docker image for the "backend" service. context: . sets the build context to the current directory, where the Compose file and Dockerfile are located, so the image is built from the files in that directory.

Ports: maps port 5000 on the host to port 5000 in the container, so the application running in the container can be reached from outside.

Environment: variables set inside the container and available to the application running there. Here they configure the connection to the MySQL database on Amazon RDS: the host (MYSQL_HOST), user (MYSQL_USER), password (MYSQL_PASSWORD), and database name (MYSQL_DB).

Volumes (under the service): mounts the "falsk-app" volume at /app/volumes inside the container. This is a named volume managed by Docker, not a bind mount of a host directory. Files the application writes to /app/volumes survive container restarts and can be shared with other containers.

Volumes (top level): declares the "falsk-app" named volume, which Docker Compose creates if it does not already exist.
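The Flask application's source is not shown in this article, so the following is only a sketch, assuming the app reads its database settings from the four variables defined under environment. The helper load_db_config and the keys of the returned dictionary are hypothetical; the point is that failing fast on a missing variable makes a misconfigured docker-compose.yml obvious at startup.

```python
import os
from typing import Mapping

# Variable names from the environment section of docker-compose.yml.
REQUIRED = ("MYSQL_HOST", "MYSQL_USER", "MYSQL_PASSWORD", "MYSQL_DB")


def load_db_config(env: Mapping[str, str] = os.environ) -> dict:
    """Collect the MySQL settings that Docker Compose injects into the container."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    return {
        "host": env["MYSQL_HOST"],
        "user": env["MYSQL_USER"],
        "password": env["MYSQL_PASSWORD"],
        "database": env["MYSQL_DB"],
    }
```

Passing the environment as a parameter (defaulting to os.environ) keeps the function easy to test without touching the real process environment.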

To access the application, open the EC2 section of the AWS Management Console and copy the instance's public IPv4 address. In a new browser tab, enter that address followed by ":5000" in the URL bar (for example, http://<EC2-public-IP>:5000). The instance's security group must allow inbound TCP traffic on port 5000 for this to work. You can then enter and modify data through the application.
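The same check can be scripted with Python's standard urllib. This is a sketch with hypothetical helper names; 203.0.113.10 is a documentation-only placeholder address, not the instance's real IP. Any HTTP response, even an error status, counts as reachable, while refused connections and timeouts (both OSError subclasses) usually point to a stopped container or a missing security group rule.

```python
import http.client
import urllib.error
import urllib.request


def app_url(public_ip: str, port: int = 5000) -> str:
    """Build the URL for the app published on the instance's public IP."""
    return f"http://{public_ip}:{port}/"


def is_reachable(url: str, timeout: float = 5.0) -> bool:
    """Return True if an HTTP request to url gets any response from the server."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered, just with an error status such as 404 or 500.
        return True
    except (OSError, http.client.HTTPException):
        # URLError, connection refused, and timeouts are all OSError subclasses.
        return False


if __name__ == "__main__":
    url = app_url("203.0.113.10")  # placeholder: use your instance's public IP
    print(url, "reachable" if is_reachable(url) else "not reachable")
```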

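To run MySQL commands from the EC2 instance, install the MySQL client and connect to the RDS endpoint using the credentials from docker-compose.yml (-p prompts for the password):

sudo apt install mysql-client

mysql -h database-instance.c5wcc3sarvso.ca-central-1.rds.amazonaws.com -u admin -p db1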

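Data stored in MySQL remains intact after stopping and restarting the Docker Compose stack because the database lives on the RDS instance, outside the containers. The named volume additionally preserves any files the backend writes to /app/volumes across container restarts.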

This AWS deployment combines Docker-based containerization on EC2 with a managed MySQL database on RDS, keeps the application's data persistent, and maintains a clear separation between the public and private network segments.

Thank you for taking the time to read my article. I'm excited about the prospect of working with talented recruiters. If you're interested in exploring potential opportunities, please reach out to me. I'm ready to discuss how I can contribute to your team's success. Let's make great things happen together.

email id: pardeep17.kp@gmail.com