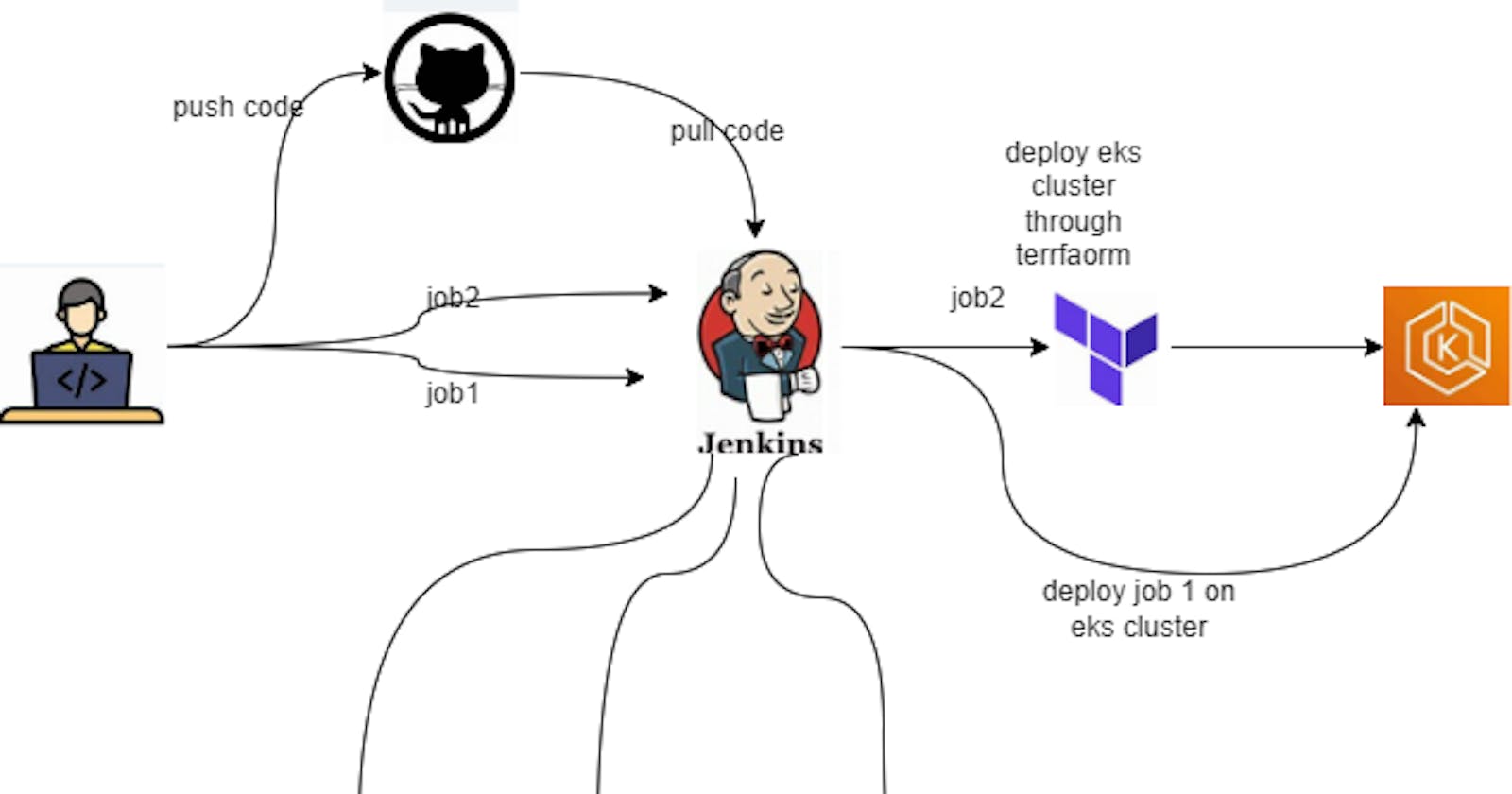

In this project, we aim to deploy an E-commerce website on an Amazon Elastic Kubernetes Service (EKS) cluster using Jenkins. The EKS cluster infrastructure is provisioned using Terraform, ensuring efficient management and scalability of the Kubernetes environment. Here's an overview of the project components and workflow:

Source Code Management (SCM):

- The project's source code is hosted on GitHub, providing version control and collaboration features.

Continuous Integration (CI) Pipeline:

- Jenkins orchestrates the CI/CD pipeline, automating the build, test, and deployment processes.

Static Code Analysis:

- SonarQube is integrated into the pipeline for static code analysis. It provides insights into code quality, security vulnerabilities, and maintainability.

Build Automation:

- Maven is utilized for build automation, handling the compilation, packaging, and dependency management of the Java-based E-commerce application.

Security Scanning:

- OWASP Dependency-Check is integrated into the pipeline to scan project dependencies for publicly disclosed vulnerabilities. This helps catch known security flaws in third-party libraries before the application is deployed.

Deployment to Amazon EKS:

- The final stage of the pipeline involves deploying the E-commerce application to the Amazon EKS cluster. Kubernetes manifests define the deployment and service configurations required to run the application on the cluster.

Infrastructure as Code (IaC):

- Terraform is employed to provision and manage the underlying infrastructure, including the EKS cluster. This approach ensures consistency, repeatability, and versioning of the infrastructure configuration.

Containerization:

- Docker is utilized for containerizing the E-commerce application, enabling consistent deployment across various environments.

Dynamic Scaling and High Availability:

- Leveraging Kubernetes' capabilities, the EKS cluster provides dynamic scaling and high availability for the deployed application, ensuring optimal performance and resilience.

Install the following on the Jenkins server:

- Java
- Jenkins
- Docker
- Terraform
- AWS CLI
- kubectl
- eksctl
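Once these tools are installed, a quick way to see what is still missing is a small check over PATH. This is a minimal sketch; the helper name `missing_tools` is hypothetical, and it assumes the standard command names (`aws` for the AWS CLI). Jenkins runs as a systemd service rather than a command-line tool, so it is left out of the check.

```bash
#!/usr/bin/env bash
# Print the name of every command that is not found on PATH.
# Prints nothing when all of the given tools are installed.
missing_tools() {
  local tool
  for tool in "$@"; do
    # command -v returns 0 only if the command can be found
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "$tool"
    fi
  done
}
```

For example, `missing_tools java docker terraform aws kubectl eksctl` prints one line per tool that is not installed yet.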

Step 1: Launch an EC2 instance of type t2.large with 30 GB of storage, and install Java and Jenkins on it.

Connect to the instance through SSH or EC2 Instance Connect and create the script:

```bash
vim script1.sh
```

```bash
#!/bin/bash
# script1.sh

# Update package lists and install necessary packages
sudo apt update
sudo apt install -y fontconfig openjdk-17-jre

# Wait for a moment for Java installation to take effect
sleep 5

# Check Java version
java -version

# Download and install Jenkins
sudo wget -O /usr/share/keyrings/jenkins-keyring.asc https://pkg.jenkins.io/debian-stable/jenkins.io-2023.key
echo "deb [signed-by=/usr/share/keyrings/jenkins-keyring.asc] https://pkg.jenkins.io/debian-stable binary/" | sudo tee /etc/apt/sources.list.d/jenkins.list > /dev/null
sudo apt-get update
sudo apt-get install -y jenkins

# Enable and start the Jenkins service, then check its status
sudo systemctl enable jenkins
sudo systemctl start jenkins
sudo systemctl status jenkins --no-pager
```

Make the script executable and run it:

```bash
chmod +x script1.sh
ls
./script1.sh
```

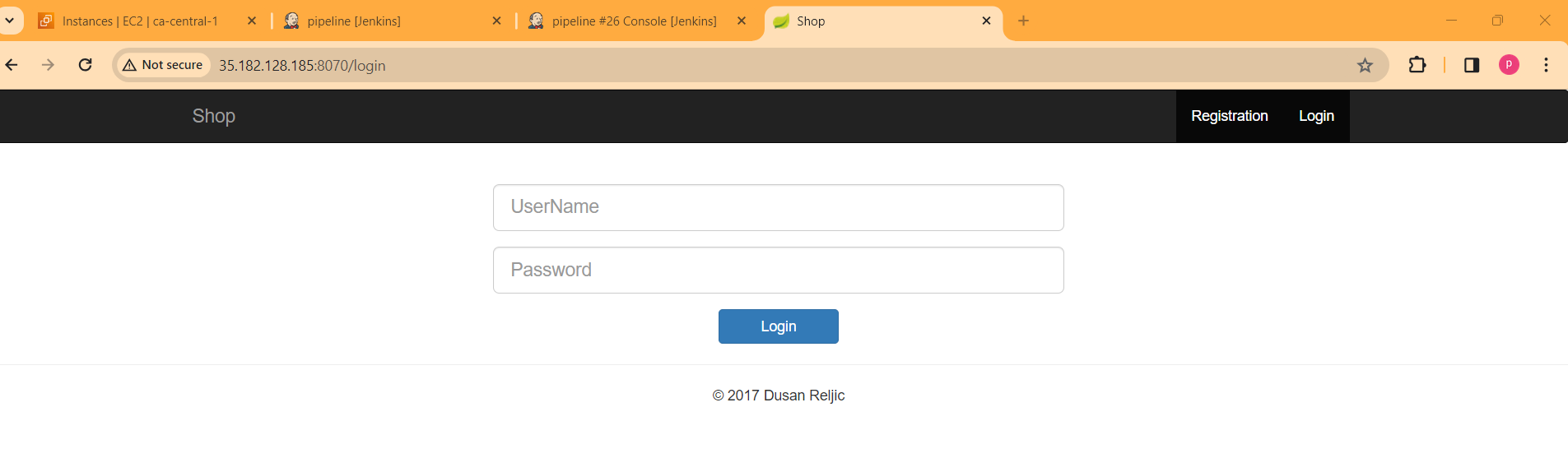

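Copy the public IP address of the EC2 instance and open http://Public_ip_address:8080/ in a new browser tab (in my case, http://35.182.128.185:8080/). Then retrieve the initial admin password:

```bash
sudo cat /var/lib/jenkins/secrets/initialAdminPassword
```

Paste the password into the Jenkins setup page to unlock and start Jenkins.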
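Jenkins can take a little while to write the initial admin password after the service starts. A minimal sketch of a wait loop, assuming bash; `wait_for_file` is a hypothetical helper, not part of Jenkins.

```bash
#!/usr/bin/env bash
# Wait until a file exists and is non-empty, checking once per second.
# Returns 0 when the file appears, 1 if the timeout (in seconds) expires.
wait_for_file() {
  local path="$1" timeout="${2:-60}" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # -s is true when the file exists and has a size greater than zero
    if [ -s "$path" ]; then
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  # One last check after the final sleep
  [ -s "$path" ]
}
```

The password file is read with sudo above, so run the helper from a root shell as well (for example after `sudo -i`): `wait_for_file /var/lib/jenkins/secrets/initialAdminPassword 120 && cat /var/lib/jenkins/secrets/initialAdminPassword`.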

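Install Docker on the Jenkins server:

```bash
#!/bin/bash
# script2.sh
sudo apt-get update
sudo apt-get install docker.io -y

# Let your login user run Docker without sudo (in my case, ubuntu)
sudo usermod -aG docker $USER

# Let the jenkins service user run Docker, then restart Jenkins so it picks up the new group
sudo usermod -aG docker jenkins
sudo systemctl restart jenkins

sudo apt-get install docker-compose -y
```

Group membership only applies to new login sessions, so log out and back in (or run newgrp docker in your interactive shell) before using docker without sudo.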
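Because group changes only take effect for new sessions (and, for the Jenkins service, after a restart), it can help to confirm membership before running a pipeline. A small sketch, assuming bash and the standard `id` utility; `user_in_group` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Return 0 if the given user is a member of the given group.
# `id -nG USER` lists the names of all groups the user belongs to.
user_in_group() {
  local user="$1" group="$2"
  id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qx -- "$group"
}
```

For example, `user_in_group jenkins docker && echo "jenkins can use Docker"`.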

Add your Docker Hub credentials to the Jenkins global credentials with the ID docker (the pipeline refers to credentialsId: 'docker'):

Dashboard -> Manage Jenkins -> Credentials -> System -> Global credentials

Step 2: To add a Jenkins slave (agent) node running on a separate EC2 instance to your Jenkins server, follow these steps:

Create an EC2 Ubuntu instance of type t2.medium and connect to it through SSH.

Install Java on that server:

```bash
#!/bin/bash
# Update package lists and install necessary packages
sudo apt update
sudo apt install -y fontconfig openjdk-17-jre

# Wait for a moment for Java installation to take effect
sleep 5

# Check Java version
java -version
```
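To confirm the slave runs the same Java major version as the Jenkins server (17 in both scripts), the first line of `java -version` can be parsed. A sketch under the assumption that the version is the first double-quoted token, as it is for OpenJDK builds; `java_major_version` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Extract the Java major version from the first line of `java -version` output.
# Handles both modern ("17.0.10") and legacy ("1.8.0_392") version strings.
java_major_version() {
  local line="$1" version major
  # The version is the first double-quoted token, e.g. openjdk version "17.0.10"
  version=$(printf '%s\n' "$line" | sed -n 's/.*version "\([^"]*\)".*/\1/p')
  [ -n "$version" ] || return 1
  major=${version%%.*}
  # Legacy scheme: "1.8" means Java 8, so take the second field instead
  if [ "$major" = "1" ]; then
    major=$(printf '%s\n' "$version" | cut -d. -f2)
  fi
  echo "$major"
}
```

Since `java -version` writes to stderr, call it as `java_major_version "$(java -version 2>&1 | head -n 1)"`.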

Create a folder named jenkins-slave and give it execute permissions. Go to the jenkins-slave folder and generate a key pair:

```bash
ssh-keygen
cd /root/.ssh/
cat id_rsa
```

Copy the private key and upload it to the Jenkins credentials. The output looks like this:

```text
-----BEGIN OPENSSH PRIVATE KEY-----
...
-----END OPENSSH PRIVATE KEY-----
```

In Jenkins, go to Manage Jenkins -> Credentials -> System -> Global credentials -> Add credentials and add the private key as an SSH Username with private key credential.

Then go to Manage Jenkins -> Nodes -> New node and configure it:

- Number of executors: the number of pipelines that can run on this node at the same time.
- Remote root directory: the workspace path on the slave where pipeline builds run.

The new slave node will be added.

Makes the machine temporarily offline.

On the slave, copy the public key (id_rsa.pub) and paste it into the authorized_keys file in the same .ssh directory.
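Pasting by hand works, but the same step can be scripted so that re-running it does not duplicate the key. A minimal sketch, assuming bash; `add_authorized_key` is a hypothetical helper, and the 700/600 permissions are the commonly recommended values for the `.ssh` directory and `authorized_keys`.

```bash
#!/usr/bin/env bash
# Append a public key to authorized_keys only if it is not already present,
# then apply the commonly recommended permissions (700 dir, 600 file).
add_authorized_key() {
  local pubkey_file="$1" ssh_dir="${2:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys" key
  key=$(cat "$pubkey_file") || return 1
  [ -n "$key" ] || return 1
  mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir" || return 1
  touch "$auth" || return 1
  # -x matches whole lines, -F treats the key as a fixed string
  if ! grep -qxF -- "$key" "$auth"; then
    printf '%s\n' "$key" >> "$auth"
  fi
  chmod 600 "$auth"
}
```

Since the key pair above was generated as root, on the slave this would be `add_authorized_key /root/.ssh/id_rsa.pub /root/.ssh`.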

Go to Launch agent.

To test, check that the slave node is added and shows 3 idle executors, which means 3 pipelines can run on it at a time.

Step 3: Go to Dashboard -> Manage Jenkins -> Plugins -> Available plugins and install the following (install without restart):

- SonarQube Scanner
- JDK
- Docker
- Docker Commons
- Docker Pipeline
- docker-build-step
- CloudBees Docker Build and Publish
- OWASP Dependency-Check (security plugin)

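Now configure the tools under Manage Jenkins -> Tools, using the names the pipeline refers to:

- JDK (jdk17)
- SonarQube Scanner (sonar-scanner)
- Maven (maven3)
- Docker (docker)

Add the security tool Dependency-Check (named DP-Check in the pipeline).

Apply and save.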

Add GitHub credentials to the Jenkins credentials with the ID github, so that the job we are going to create can fetch code from GitHub. Instead of your GitHub password, use a GitHub personal access token (classic).

Step 4: Create job1.

Use the Pipeline Syntax generator to create the pipeline stages:

```groovy
pipeline {
    agent any

    tools {
        // Define tools (JDK and Maven)
        jdk 'jdk17'
        maven 'maven3'
    }

    stages {
        stage('git checkout') {
            steps {
                // Checkout code from the Git repository
                git branch: 'main', credentialsId: 'github', url: 'https://github.com/Pardeep32/Ekart.git'
            }
        }

        stage('Compile') {
            steps {
                // Compile the code using Maven
                sh 'mvn clean compile -DskipTests=true'
            }
        }

        stage('OWASP scan') {
            steps {
                // Print a debug message before starting the OWASP scan
                echo 'Starting OWASP Dependency-Check scan'
                // Perform OWASP Dependency-Check scan
                dependencyCheck additionalArguments: '--scan ./ --format HTML', odcInstallation: 'DP-Check'
                // Publish Dependency-Check report
                dependencyCheckPublisher pattern: '**/dependency-check-report.xml'
                // Print a debug message after completing the OWASP scan
                echo 'OWASP Dependency-Check scan completed'
            }
        }
    }
}
```

Save, apply, and build the pipeline.

Both dependency-check-report.html and dependency-check-report.xml are generated.
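To check from the shell that both reports exist in the job workspace, something like the following can be used. It is a sketch that assumes GNU find (for `-quit`) and the default report file names; `check_dc_reports` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Print the path of each Dependency-Check report (HTML and XML) found under a
# directory, and return non-zero if either report is missing.
check_dc_reports() {
  local dir="${1:-.}" fmt hit status=0
  for fmt in html xml; do
    # -print -quit stops at the first match (GNU find)
    hit=$(find "$dir" -type f -name "dependency-check-report.$fmt" -print -quit)
    if [ -n "$hit" ]; then
      echo "$hit"
    else
      echo "missing: dependency-check-report.$fmt" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `check_dc_reports /var/lib/jenkins/workspace/job1`, assuming the job is named job1 and ran on the Jenkins server with the default Jenkins home; builds on the slave use its remote root directory instead.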

Step 5: Now run a SonarQube Docker container on the Jenkins server:

```bash
docker run -d --name sonar-ctr -p 9000:9000 sonarqube:lts-community
```

Make sure port 9000 is allowed in the security group of the Jenkins server.
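SonarQube needs a minute or two to start inside the container. Its Web API exposes `GET /api/system/status`, which returns JSON with a `status` field that reads `UP` once the server is ready. A minimal polling sketch, assuming bash, curl, and compact JSON output; both function names are hypothetical.

```bash
#!/usr/bin/env bash
# Extract the "status" field from a SonarQube /api/system/status JSON response.
parse_sonar_status() {
  sed -n 's/.*"status":"\([A-Z_]*\)".*/\1/p'
}

# Poll SonarQube until it reports UP, or give up after the given number of tries.
# Assumes curl is installed and SonarQube listens on the given base URL.
wait_for_sonar() {
  local base_url="${1:-http://localhost:9000}" tries="${2:-30}" i status
  for ((i = 0; i < tries; i++)); do
    status=$(curl -s "$base_url/api/system/status" | parse_sonar_status)
    if [ "$status" = "UP" ]; then
      return 0
    fi
    sleep 5
  done
  return 1
}
```

On the Jenkins server: `wait_for_sonar http://localhost:9000 && echo "SonarQube is up"`.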

Open http://Public_ip_address:9000 (the public IP of the EC2 instance) and log in with username admin and password admin.

Go to Administration -> Security -> Users to generate a token. Copy the token, then go to Jenkins -> Manage Jenkins -> Credentials -> System -> Global credentials and add it as Secret text with the ID sonar-token.

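Configure the SonarQube server in Jenkins, naming it sonar-server (the name used by withSonarQubeEnv) and using the sonar-token credential.

Add stage 4 to the pipeline. It uses SCANNER_HOME, which the complete pipeline defines in its environment block as SCANNER_HOME = tool 'sonar-scanner':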

```groovy
stage('Sonar analysis') {
    steps {
        // Execute SonarQube analysis
        withSonarQubeEnv('sonar-server') {
            sh '''$SCANNER_HOME/bin/sonar-scanner \
                -Dsonar.projectName=Shopping-cart \
                -Dsonar.java.binaries=. \
                -Dsonar.projectKey=Shopping-cart'''
        }
    }
}
```

Step 6: Build the application image with Docker in stage 5 of the pipeline.

This is my Dockerfile in the repo (docker/Dockerfile):

Create a Docker image from this file and push the image to Docker Hub (make sure you have a Docker Hub account).

```groovy
stage('docker build and push') {
    steps {
        script {
            // Build and push Docker image
            withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {
                sh 'docker build -t shopping-cart -f docker/Dockerfile .'
                // Image name should be in the format: username/repository:tag
                sh 'docker tag shopping-cart pardeepkaur/shopping-cart:latest'
                sh 'docker push pardeepkaur/shopping-cart:latest'
            }
        }
    }
}
```
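The pipeline pushes every build as `latest`, which makes it hard to tell builds apart. One option is to also tag each image with the Jenkins `BUILD_NUMBER` environment variable. A small sketch, assuming bash; `image_ref` is a hypothetical helper, and the tag check follows Docker's documented tag rules (letters, digits, `_`, `.`, `-`, not starting with `.` or `-`, at most 128 characters).

```bash
#!/usr/bin/env bash
# Build a Docker Hub image reference in the form username/repository:tag.
# The tag defaults to "latest", as in the pipeline above.
image_ref() {
  local user="$1" repo="$2" tag="${3:-latest}"
  local tag_re='^[A-Za-z0-9_][A-Za-z0-9_.-]{0,127}$'
  if [ -z "$user" ] || [ -z "$repo" ]; then
    echo "usage: image_ref USER REPO [TAG]" >&2
    return 1
  fi
  # Reject tags that Docker would refuse
  if [[ ! $tag =~ $tag_re ]]; then
    echo "invalid tag: $tag" >&2
    return 1
  fi
  echo "${user}/${repo}:${tag}"
}
```

In the same shell script as the helper, a build could then run `docker tag shopping-cart "$(image_ref pardeepkaur shopping-cart "$BUILD_NUMBER")"` and push that tag alongside `latest`.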

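Now run the Docker container by adding this stage to the pipeline:

```groovy
stage('docker run') {
    steps {
        script {
            withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {
                // Remove a container left over from a previous build, if any
                sh 'docker rm -f shop || true'
                sh 'docker run -d --name shop -p 8070:8070 pardeepkaur/shopping-cart:latest'
            }
        }
    }
}
```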

Complete pipeline:

```groovy
pipeline {
    agent any

    tools {
        // Define tools (JDK and Maven)
        jdk 'jdk17'
        maven 'maven3'
    }

    environment {
        SCANNER_HOME = tool 'sonar-scanner'
    }

    stages {
        stage('git checkout') {
            steps {
                // Checkout code from the Git repository
                git branch: 'main', credentialsId: 'github', url: 'https://github.com/Pardeep32/Ekart.git'
            }
        }

        stage('Compile') {
            steps {
                // Compile the code using Maven
                sh 'mvn clean compile -DskipTests=true'
            }
        }

        stage('OWASP scan') {
            steps {
                // Print a debug message before starting the OWASP scan
                echo 'Starting OWASP Dependency-Check scan'
                // Perform OWASP Dependency-Check scan
                dependencyCheck additionalArguments: '--scan ./ --format HTML', odcInstallation: 'DP-Check'
                // Publish the Dependency-Check report (the publisher reads the XML report)
                dependencyCheckPublisher pattern: '**/dependency-check-report.xml'
                // Print a debug message after completing the OWASP scan
                echo 'OWASP Dependency-Check scan completed'
            }
        }

        stage('Sonar analysis') {
            steps {
                // Execute SonarQube analysis
                withSonarQubeEnv('sonar-server') {
                    sh '''$SCANNER_HOME/bin/sonar-scanner \
                        -Dsonar.projectName=Shopping-cart \
                        -Dsonar.java.binaries=. \
                        -Dsonar.projectKey=Shopping-cart'''
                }
            }
        }

        stage('Build') {
            steps {
                // Package the application using Maven
                sh 'mvn clean package -DskipTests=true'
            }
        }

        stage('docker build and push') {
            steps {
                script {
                    // Log in to Docker Hub with the 'docker' credentials for the enclosed steps
                    withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {
                        sh 'docker build -t shopping-cart -f docker/Dockerfile .'
                        // Image name should be in the format: username/repository:tag
                        sh 'docker tag shopping-cart pardeepkaur/shopping-cart:latest'
                        sh 'docker push pardeepkaur/shopping-cart:latest'
                    }
                }
            }
        }

        stage('docker run') {
            steps {
                script {
                    // Run the Docker container
                    withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {
                        // Remove a container left over from a previous build, if any
                        sh 'docker rm -f shop || true'
                        sh 'docker run -d --name shop -p 8070:8070 pardeepkaur/shopping-cart:latest'
                    }
                }
            }
        }
    }
}
```

Step 7: Now deploy the application on an EKS cluster.

First, install Terraform on the Jenkins server:

```bash
#!/bin/bash
# Install Terraform from the HashiCorp apt repository
sudo apt install wget -y
wget -O- https://apt.releases.hashicorp.com/gpg | sudo gpg --dearmor -o /usr/share/keyrings/hashicorp-archive-keyring.gpg
echo "deb [signed-by=/usr/share/keyrings/hashicorp-archive-keyring.gpg] https://apt.releases.hashicorp.com $(lsb_release -cs) main" | sudo tee /etc/apt/sources.list.d/hashicorp.list
sudo apt update && sudo apt install terraform -y
```

Before creating the EKS cluster from Jenkins, attach an IAM role to the Jenkins server's EC2 instance. The role needs administrator access so that it can create the EKS cluster in the AWS account.
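To confirm the role is actually in use before running the Terraform job, check the caller identity on the Jenkins server. `aws sts get-caller-identity --query Arn --output text` prints an ARN of the form `arn:aws:sts::ACCOUNT:assumed-role/ROLE/SESSION` when credentials come from an instance role. A sketch, assuming bash; `role_from_arn` is a hypothetical helper, and the role name used in the example is made up.

```bash
#!/usr/bin/env bash
# Given the caller ARN, print the IAM role name if the caller is an assumed
# role (as it is for an EC2 instance role); otherwise return non-zero.
role_from_arn() {
  local arn="$1" rest
  case "$arn" in
    arn:aws:sts::*:assumed-role/*/*)
      # Drop everything up to "assumed-role/", then the trailing session name
      rest=${arn#*:assumed-role/}
      echo "${rest%%/*}"
      ;;
    *)
      return 1
      ;;
  esac
}
```

Running `role_from_arn "$(aws sts get-caller-identity --query Arn --output text)"` prints the attached role's name, or fails if the CLI is using some other credentials.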

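Step 8: Create job2:

```groovy
pipeline {
    agent any

    // Build parameter that selects the Terraform action for the last stage
    parameters {
        choice(name: 'action', choices: ['apply', 'destroy'], description: 'Terraform action to run')
    }

    stages {
        stage('Checkout from Git') {
            steps {
                git branch: 'main', url: 'https://github.com/Pardeep32/Ekart.git'
            }
        }

        stage('Terraform version') {
            steps {
                sh 'terraform --version'
            }
        }

        stage('Terraform init') {
            steps {
                dir('EKS_TERRAFORM') {
                    sh 'terraform init'
                }
            }
        }

        stage('Terraform validate') {
            steps {
                dir('EKS_TERRAFORM') {
                    sh 'terraform validate'
                }
            }
        }

        stage('Terraform plan') {
            steps {
                dir('EKS_TERRAFORM') {
                    sh 'terraform plan'
                }
            }
        }

        stage('Terraform apply/destroy') {
            steps {
                dir('EKS_TERRAFORM') {
                    // Runs 'terraform apply' or 'terraform destroy' depending on the chosen parameter
                    sh "terraform ${params.action} --auto-approve"
                }
            }
        }
    }
}
```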

Run the pipeline with action set to apply to create the EKS cluster.
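Because the last stage passes `action` straight to Terraform with auto-approve, a guard that rejects anything other than apply or destroy is a cheap safety net. A minimal sketch, assuming bash; `validate_action` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Allow only the two Terraform subcommands the job is meant to run.
validate_action() {
  case "$1" in
    apply|destroy)
      return 0
      ;;
    *)
      echo "invalid action '$1': expected apply or destroy" >&2
      return 1
      ;;
  esac
}
```

Jenkins exposes build parameters to shell steps as environment variables, so a shell step could run `validate_action "$action" && terraform "$action" -auto-approve`.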

Install kubectl on the Jenkins server:

```bash
#!/bin/bash
# script4.sh
sudo apt update
sudo apt install curl -y
curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl
sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl
kubectl version --client
```
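The official kubectl install instructions also publish a `kubectl.sha256` file next to each binary so the download can be checked before it is installed. A small sketch of that check, assuming bash and GNU coreutils `sha256sum`; `verify_sha256` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Verify a downloaded file against an expected SHA-256 hex digest.
verify_sha256() {
  local file="$1" expected="$2" actual
  # sha256sum prints "<digest>  <file>"; keep only the digest
  actual=$(sha256sum "$file" | cut -d' ' -f1)
  [ -n "$expected" ] && [ "$actual" = "$expected" ]
}
```

After `curl -LO https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/amd64/kubectl.sha256`, run `verify_sha256 kubectl "$(cat kubectl.sha256)"` before the `sudo install` step.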

Install the AWS CLI on the Jenkins server:

```bash
#!/bin/bash
# script5.sh: install AWS CLI v2
curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
sudo apt-get install unzip -y
unzip awscliv2.zip
sudo ./aws/install
```


Install the Kubernetes plugins in Jenkins.

Now deploy the container to the cluster. On the Jenkins instance, run this command to configure access to the EKS cluster:

```bash
aws eks update-kubeconfig --name EKS_CLOUD --region ca-central-1
```

It generates a Kubernetes configuration file, by default at ~/.kube/config.

Copy this config file to your local machine as Secretfile11.txt, then upload it to the Jenkins global credentials as a Secret file with the ID k8s.
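Before copying the file, it helps to know exactly which one update-kubeconfig wrote to: the first path in `$KUBECONFIG` if that variable is set, otherwise `~/.kube/config`. A sketch, assuming bash; `kubeconfig_path` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Resolve the file `aws eks update-kubeconfig` writes to by default:
# the first entry of $KUBECONFIG if it is set, otherwise ~/.kube/config.
kubeconfig_path() {
  if [ -n "${KUBECONFIG:-}" ]; then
    # KUBECONFIG may hold a colon-separated list of files on Linux
    echo "${KUBECONFIG%%:*}"
  else
    echo "$HOME/.kube/config"
  fi
}
```

`cat "$(kubeconfig_path)"` prints the file whose contents go into Secretfile11.txt.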

Add the Kubernetes stage to the pipeline:

```groovy
pipeline {
    agent any

    tools {
        // Define tools (JDK and Maven)
        jdk 'jdk17'
        maven 'maven3'
    }

    environment {
        SCANNER_HOME = tool 'sonar-scanner'
    }

    stages {
        stage('git checkout') {
            steps {
                // Checkout code from the Git repository
                git branch: 'main', credentialsId: 'github', url: 'https://github.com/Pardeep32/Ekart.git'
            }
        }

        stage('Compile') {
            steps {
                // Compile the code using Maven
                sh 'mvn clean compile -DskipTests=true'
            }
        }

        stage('OWASP scan') {
            steps {
                // Print a debug message before starting the OWASP scan
                echo 'Starting OWASP Dependency-Check scan'
                // Perform OWASP Dependency-Check scan
                dependencyCheck additionalArguments: '--scan ./ --format HTML', odcInstallation: 'DP-Check'
                // Publish the Dependency-Check report (the publisher reads the XML report)
                dependencyCheckPublisher pattern: '**/dependency-check-report.xml'
                // Print a debug message after completing the OWASP scan
                echo 'OWASP Dependency-Check scan completed'
            }
        }

        stage('Sonar analysis') {
            steps {
                // Execute SonarQube analysis
                withSonarQubeEnv('sonar-server') {
                    sh '''$SCANNER_HOME/bin/sonar-scanner \
                        -Dsonar.projectName=Shopping-cart \
                        -Dsonar.java.binaries=. \
                        -Dsonar.projectKey=Shopping-cart'''
                }
            }
        }

        stage('Build') {
            steps {
                // Package the application using Maven
                sh 'mvn clean package -DskipTests=true'
            }
        }

        stage('docker build and push') {
            steps {
                script {
                    // Log in to Docker Hub with the 'docker' credentials for the enclosed steps
                    withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {
                        sh 'docker build -t shopping-cart -f docker/Dockerfile .'
                        // Image name should be in the format: username/repository:tag
                        sh 'docker tag shopping-cart pardeepkaur/shopping-cart:latest'
                        sh 'docker push pardeepkaur/shopping-cart:latest'
                    }
                }
            }
        }

        stage('docker run') {
            steps {
                script {
                    // Run the Docker container
                    withDockerRegistry(credentialsId: 'docker', toolName: 'docker') {
                        // Remove a container left over from a previous build, if any
                        sh 'docker rm -f shop || true'
                        sh 'docker run -d --name shop -p 8070:8070 pardeepkaur/shopping-cart:latest'
                    }
                }
            }
        }

        stage('Deploy to Kubernetes') {
            steps {
                script {
                    withKubeConfig(
                        caCertificate: '',
                        clusterName: '',
                        contextName: '',
                        credentialsId: 'k8s', // This should refer to your Kubernetes credentials ID
                        namespace: '',
                        restrictKubeConfigAccess: false,
                        serverUrl: ''
                    ) {
                        sh 'kubectl apply -f deploymentservice.yml'
                    }
                }
            }
        }
    }
}
```

The Terraform state is updated in the backend S3 bucket.

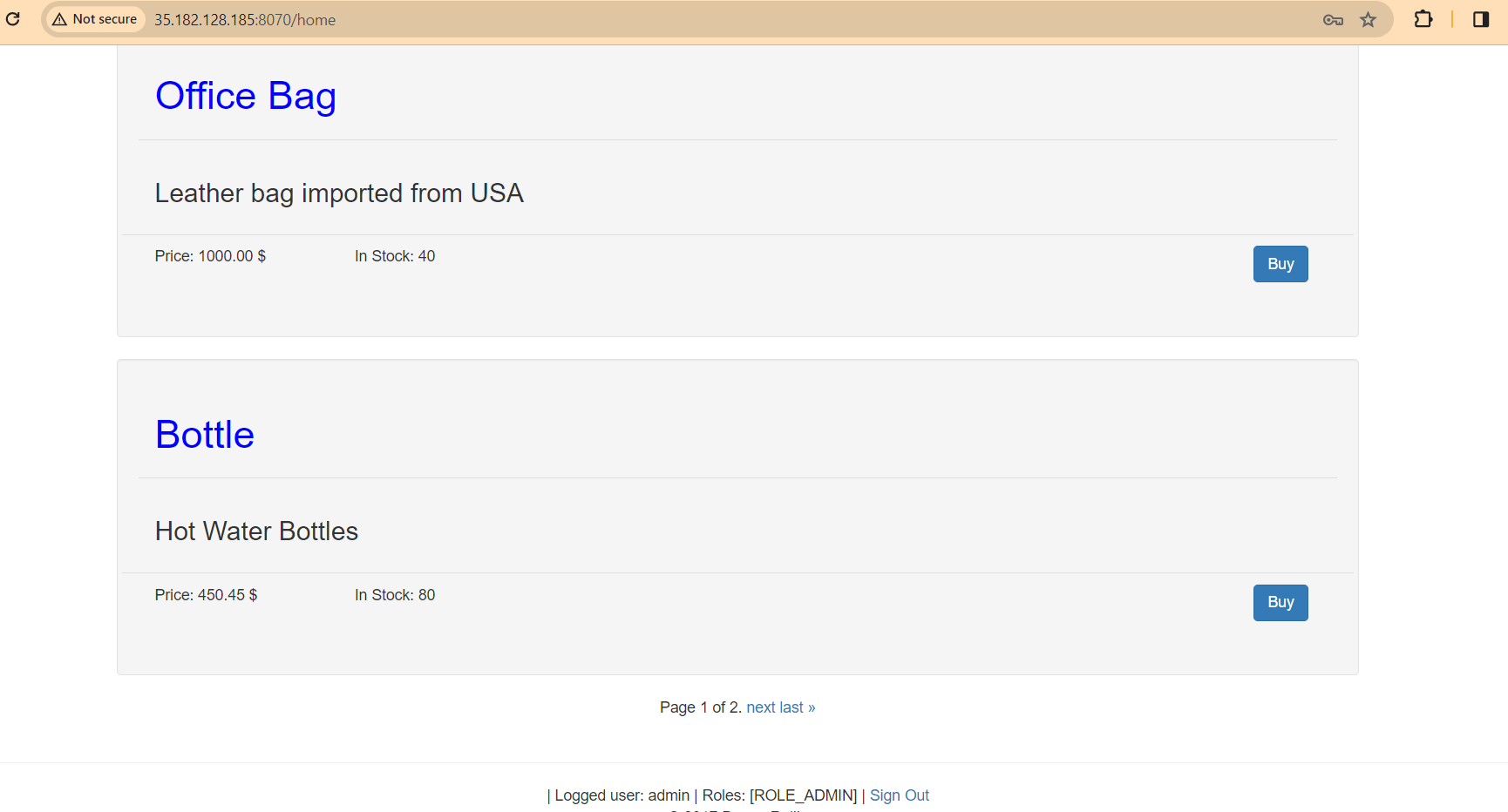

Access the application at http://Public_ip_address:8070 and check the cluster resources:

```bash
kubectl get nodes
kubectl get pods
kubectl get all
```
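`kubectl get pods` is easy to read by eye, but in a script it is handier to fail unless every pod is Running and fully ready. A sketch that reads `kubectl get pods --no-headers` output (columns NAME, READY, STATUS, ...), assuming bash and awk; `all_pods_running` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Read `kubectl get pods --no-headers` output on stdin. Succeed only if at
# least one pod is listed and every pod has STATUS Running with all of its
# containers ready (READY column n/n); print each pod that fails the check.
all_pods_running() {
  awk '
    NF == 0 { next }
    {
      seen++
      split($2, ready, "/")
      if ($3 != "Running" || ready[1] != ready[2]) {
        bad++
        print "not ready: " $1
      }
    }
    END { if (seen == 0 || bad > 0) exit 1; exit 0 }
  '
}
```

For example, `kubectl get pods --no-headers | all_pods_running && echo "all pods are ready"`. For a Deployment, `kubectl rollout status` is the built-in alternative.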

This is the SonarQube scanner report.

Kubernetes offers two essential features: autoscaling and autohealing.

Autoscaling: A HorizontalPodAutoscaler automatically adjusts the number of pods in a deployment based on CPU utilization or custom metrics. This keeps resource usage and performance balanced during fluctuating workloads while minimizing costs.
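The Horizontal Pod Autoscaler's documented scaling rule is desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue), with no change when the ratio is within a tolerance (10% by default). A simplified sketch in bash integer arithmetic, which ignores stabilization windows, missing metrics, and pod readiness; `desired_replicas` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Simplified HPA rule: desired = ceil(current * metric / target), skipped when
# |metric/target - 1| <= 0.1, then clamped to [min, max].
# Arguments: current_replicas current_metric target_metric min max
desired_replicas() {
  local current=$1 metric=$2 target=$3 min=$4 max=$5 desired diff
  # Absolute difference between the observed metric and the target
  diff=$(( metric > target ? metric - target : target - metric ))
  if (( diff * 10 <= target )); then
    # Within the 10% tolerance: keep the current replica count
    desired=$current
  else
    # ceil(a / b) for positive integers is (a + b - 1) / b
    desired=$(( (current * metric + target - 1) / target ))
  fi
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

For example, 3 pods averaging 90% CPU against a 50% target give ceil(3 * 90 / 50) = ceil(5.4) = 6 replicas, so `desired_replicas 3 90 50 1 10` prints 6.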

Autohealing: Automatically detects and recovers from pod failures or issues. Kubernetes restarts or reschedules unhealthy pods, ensuring high availability and reliability without manual intervention.
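For liveness probes, the kubelet restarts a container (subject to the pod's restartPolicy) after failureThreshold consecutive probe failures, 3 by default, and any success resets the count. A toy simulation of that counting, assuming bash; it ignores initialDelaySeconds, periodSeconds, and timeouts, and `liveness_sim` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Toy model of a liveness probe: read probe results ("ok" or "fail"), one per
# line, and print "restart" whenever the number of consecutive failures
# reaches the threshold. A success resets the failure count.
liveness_sim() {
  local threshold="${1:-3}" failures=0 result
  while read -r result; do
    if [ "$result" = "ok" ]; then
      failures=0
      echo "healthy"
    else
      failures=$((failures + 1))
      if [ "$failures" -ge "$threshold" ]; then
        echo "restart"
        # The restarted container starts counting from zero again
        failures=0
      else
        echo "failing ($failures/$threshold)"
      fi
    fi
  done
}
```

Feeding it ok, fail, fail, fail, ok (one per line) prints a single restart after the third failure, then healthy again.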

These features, combined with readiness and liveness probes, let developers build resilient, self-healing applications that adapt to changing conditions, minimizing downtime and keeping performance consistent.

As a best practice, use a Jenkins pipeline to destroy the Amazon EKS cluster as well (for example, job2 with the action set to destroy). This keeps the cluster lifecycle consistent, traceable, and automated.

By integrating with Jenkins, the destruction process can be triggered automatically, allowing for seamless integration with other CI/CD workflows. This ensures that resources are efficiently managed and cleaned up when no longer needed, optimizing cost and resource allocation.

I hope this article helps you. Thank you for reading.