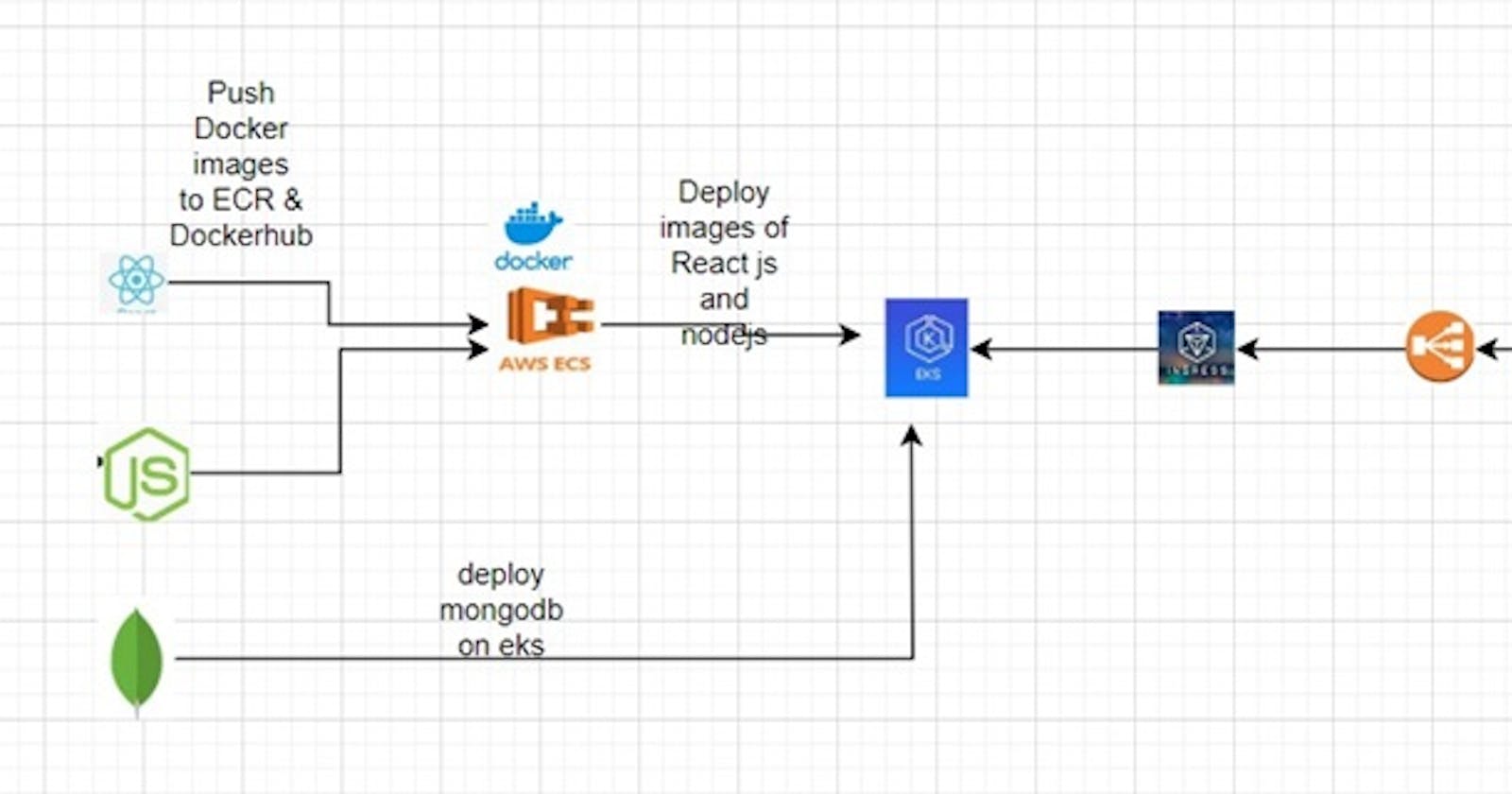

A three-tier architecture divides applications into three interconnected components: Presentation (Frontend), Application (Backend), and Data (Database). This modular approach ensures scalability, maintainability, and separation of concerns. Leveraging Amazon EKS for container orchestration enhances deployment efficiency.

Advantages of Amazon EKS:

Container Orchestration: Simplifies the deployment, management, and scaling of containerized applications through Kubernetes.

Scalability: Seamlessly scales containerized applications based on demand, optimizing resource utilization.

Managed Service: AWS operates the Kubernetes control plane, which reduces the manual work involved in cluster management, upgrades, and scaling.

Integration with AWS Services: Integrates seamlessly with various AWS services, facilitating deployment within the broader AWS ecosystem.


Section 1: Understanding Three-Tier Architecture

Three Tiers:

Presentation (Frontend): Manages the user interface using React.js, allowing for dynamic and interactive user experiences.

Application (Backend): Processes user requests, communicates with the database, and utilizes Node.js for scalability and speed.

Data (Database): Stores and retrieves data with MongoDB, a NoSQL database known for flexibility and scalability.

Benefits:

Scalability: Each tier can be independently scaled for improved performance under varying loads.

Maintainability: Changes in one tier do not impact others, simplifying maintenance.

Section 2: Technologies Overview

2.1 React.js (Frontend)

Overview:

- JavaScript library for building user interfaces efficiently.

Key Concepts:

- Components, State, Props.

2.2 Node.js (Backend)

Overview:

- Server-side runtime for scalable backend applications.

Advantages:

- Non-blocking I/O, Event-Driven Architecture.

2.3 MongoDB (Database)

Overview:

- NoSQL database for flexible and scalable data storage.

Key Features:

- Document-Oriented, Scalability.

Next Steps on EC2 Instance:

Build and Deploy:

Navigate to the cloned GitHub repository.

Install Docker to run Docker files.

Create Docker images for frontend and backend. Dockerfiles for frontend and backend are available at https://github.com/Pardeep32/TWSThreeTierAppChallenge.git

Push images to Amazon ECR.

Create an Ubuntu EC2 instance of type t2.micro and connect to it through SSH.

Select the instance and go to Connect:

Copy the SSH command, open a terminal in the directory where the key was downloaded, and paste the command. You are now connected to the server.

Clone the repo on the server.

GitHub link: git clone https://github.com/LondheShubham153/TWSThreeTierAppChallenge.git

Frontend

Create a Dockerfile for the frontend. This Dockerfile sets up an environment to run a Node.js application: it installs the dependencies, copies the application code into the container, and then starts the application with npm start.

    # Base image for this Dockerfile.
    FROM node:14

    # Working directory inside the container. The application code is copied here and later commands run here.
    WORKDIR /usr/src/app

    # Copy package.json and package-lock.json (if it exists), which hold the project metadata and dependency list.
    COPY package*.json ./

    # Install the dependencies listed in package.json inside the container.
    RUN npm install

    # Copy the rest of the application code from the host into the container.
    COPY . .

    # Default command when the container starts: run the start script defined in package.json.
    CMD [ "npm", "start" ]

Give your user ownership of the Docker socket so that Docker commands can run without sudo:

sudo chown $USER /var/run/docker.sock

Create a Docker image from the Dockerfile:

docker build -t frontendimage .

The size of frontendimage is 1.12GB.

Multi-stage builds in Docker reduce image size by keeping build-time dependencies in an earlier stage and copying only the necessary artifacts into the final production image. Create a new Docker image with a multi-stage build to reduce the image size.

Create a Dockerfile.

    # Stage 1: Big-stage (for building the application)
    FROM node:14 AS big-stage
    WORKDIR /usr/src/app
    COPY package*.json ./
    RUN npm install
    COPY . .
    # Perform any necessary build steps here if required

    # Stage 2: Slim-stage (for the production image)
    FROM node:14-slim AS slim-stage
    WORKDIR /usr/src/app
    COPY --from=big-stage /usr/src/app .
    RUN npm install --only=production
    CMD [ "npm", "start" ]

The size of the multi-stage image is reduced to 356MB.

Run the docker container from docker image:

docker run -d -p 3000:3000 multistagefrontend:latest

docker ps

In the instance's security group, add an inbound rule for port 3000.

Paste the EC2 public IP followed by :3000 into the browser:

If the application loads, the Docker build is correct.

Kill and remove the Docker container:

docker kill container_id

docker rm container_id

Now push the image to Docker Hub and ECR (you can push to Docker Hub only, to ECR only, or to both):

Push image to DockerHub:

To push your Docker image to Docker Hub, follow these steps:

Log in to Docker Hub:

docker login

You will be prompted to enter your Docker Hub username and password.

Tag your Docker image with your Docker Hub username and the desired repository name:

docker tag your_image_name your_dockerhub_username/repository_name:tag

Replace your_image_name with the name of the Docker image you want to push, your_dockerhub_username with your Docker Hub username, repository_name with the name you want to give to your repository on Docker Hub, and tag with the desired tag (e.g., latest).

Push the tagged image to Docker Hub:

docker push your_dockerhub_username/repository_name:tag

This command uploads your Docker image to Docker Hub. Depending on the size of your image and your internet connection speed, this may take some time.

Once the push is complete, your Docker image will be available on Docker Hub under your specified repository. Others can then pull your image using the same repository name and tag.

Now push the images to ECR:

Before pushing Docker images to Amazon Elastic Container Registry (ECR), ensure you have the following prerequisites:

AWS Account: You need an AWS account to access ECR.

AWS CLI: Install and configure the AWS Command Line Interface (CLI) on your local machine. You can download it from the AWS website and configure it with your AWS credentials using the aws configure command.

Install the AWS CLI:

    curl "https://awscli.amazonaws.com/awscli-exe-linux-x86_64.zip" -o "awscliv2.zip"
    sudo apt install unzip
    unzip awscliv2.zip
    sudo ./aws/install -i /usr/local/aws-cli -b /usr/local/bin --update
    aws configure

To create an AWS access key and secret access key, follow these steps:

Sign in to the AWS Management Console: Go to https://aws.amazon.com/ and sign in to the AWS Management Console using your AWS account credentials.

Open the IAM Console: In the AWS Management Console, navigate to the IAM (Identity and Access Management) service. You can find it under the "Security, Identity, & Compliance" section.

Navigate to Users: In the IAM console, click on "Users" in the left-hand menu. This will show you a list of IAM users in your AWS account.

Select the User: Click on the IAM user for which you want to create the access key. If you haven't created a user yet, you can create one by clicking on "Add user" and following the prompts. Under "Attach policies directly", give the IAM user the AdministratorAccess policy.

Open the Security Credentials Tab: Once you have selected the user, go to the "Security credentials" tab. Here, you can manage the security credentials for the selected user.

Create Access Key: Under the "Access keys" section, click on "Create access key". This will generate a new access key and secret access key pair for the user.

Download or Copy the Access Key: Once the access key is generated, you will be shown the access key ID and the secret access key. You can download the CSV file containing these credentials, or you can copy them to a secure location.

Store Access Key Securely: It's crucial to store the access key and secret access key securely. Do not share them publicly.

Docker: Make sure you have Docker installed on your local machine. This is necessary for building and tagging Docker images.

ECR Repository: Create a repository in Amazon ECR where you'll push your Docker images. You can do this through the AWS Management Console or using the AWS CLI.

In the repository, select "View push commands" and follow each step.

Copy and run the displayed commands one by one.

The image is pushed to the ECR public repo.
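The push commands shown by the console follow a login, tag, push pattern. A minimal sketch of that pattern for an ECR public repository is below. The registry alias y2f6v9b3 and the repository name threetierfrontend are taken from the manifests later in this post; the function name push_to_ecr_public and the local image name in the usage line are illustrative assumptions, so substitute your own values.

```shell
# Target image URI. Replace the alias and repository with your own.
REGISTRY="public.ecr.aws/y2f6v9b3"
REPO="threetierfrontend"
TAG="latest"
IMAGE_URI="${REGISTRY}/${REPO}:${TAG}"

# Hypothetical helper: log in, tag the local image, and push it.
push_to_ecr_public() {
  local_image="$1"
  # ECR Public login tokens are requested from the us-east-1 region.
  aws ecr-public get-login-password --region us-east-1 \
    | docker login --username AWS --password-stdin public.ecr.aws \
    && docker tag "$local_image" "$IMAGE_URI" \
    && docker push "$IMAGE_URI"
}

echo "Target image: ${IMAGE_URI}"
# Usage (needs Docker and a configured AWS CLI):
#   push_to_ecr_public multistagefrontend:latest
```

Wrapping the steps in a function keeps the target URI in one place, so the same helper can be reused for the backend image by changing REPO.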

Backend

Create a Dockerfile for the backend.

    # Stage 1: Build the application
    FROM node:14 AS builder
    WORKDIR /usr/src/app
    # Copy package files and install dependencies
    COPY package*.json ./
    RUN npm install
    # Copy application source code
    COPY . .

    # Stage 2: Create the production image
    FROM node:14-slim
    WORKDIR /usr/src/app
    # Copy built application from the previous stage
    COPY --from=builder /usr/src/app .
    CMD ["node", "index.js"]

Now push this image to Docker Hub:

Now push the image to ECR, following the same steps as for the frontend: create a public backend repository and use "View push commands".

The threetierbackend:latest image will be uploaded to ECR.

Now run the backend container:

The backend container runs properly, but it is not connected to MongoDB.

To connect the backend to MongoDB, create a Kubernetes cluster through EKS:

Before setting up an Amazon EKS cluster, ensure you have the following prerequisites:

kubectl: Install kubectl, the Kubernetes command-line tool, which you'll use to interact with the EKS cluster once it's created.

    curl -o kubectl https://amazon-eks.s3.us-west-2.amazonaws.com/1.19.6/2021-01-05/bin/linux/amd64/kubectl
    chmod +x ./kubectl
    sudo mv ./kubectl /usr/local/bin
    kubectl version --short --client

eksctl: eksctl is a command-line utility provided by AWS to simplify the creation, management, and operations of Amazon EKS clusters. It abstracts away many of the manual steps involved in setting up an EKS cluster, making it easier and faster to create Kubernetes clusters on AWS.

    curl --silent --location "https://github.com/weaveworks/eksctl/releases/latest/download/eksctl_$(uname -s)_amd64.tar.gz" | tar xz -C /tmp
    sudo mv /tmp/eksctl /usr/local/bin
    eksctl version

Setup eks cluster:

eksctl create cluster --name three-tier-cluster --region ca-central-1 --node-type t2.medium --nodes-min 2 --nodes-max 2

aws eks update-kubeconfig --region ca-central-1 --name three-tier-cluster

kubectl get node

eksctl uses CloudFormation to create a stack for the cluster.

The EKS cluster is ready.

The t2.medium EC2 instances are ready.

Before creating the frontend and backend, create the manifest files for MongoDB.

First, create a namespace. In Kubernetes, a namespace is a way to logically divide cluster resources into virtual clusters. It provides a scope for names: resource names must be unique within a namespace. Namespaces help organize and isolate different projects, teams, or applications within the same Kubernetes cluster.

kubectl create namespace three-tier

kubectl get namespace

Create deployment.yaml for mongodb

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      namespace: three-tier
      name: mongodb
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: mongodb
      template:
        metadata:
          labels:
            app: mongodb
        spec:
          containers:
          - name: mon
            image: mongo:4.4.6
            command:
            - "numactl"
            - "--interleave=all"
            - "mongod"
            - "--wiredTigerCacheSizeGB"
            - "0.1"
            - "--bind_ip"
            - "0.0.0.0"
            ports:
            - containerPort: 27017
            env:
            - name: MONGO_INITDB_ROOT_USERNAME
              valueFrom:
                secretKeyRef:
                  name: mongo-sec
                  key: username
            - name: MONGO_INITDB_ROOT_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mongo-sec
                  key: password
            volumeMounts:
            - name: mongo-volume
              mountPath: /data/db
          volumes:
          - name: mongo-volume
            persistentVolumeClaim:
              claimName: mongo-volume-claim

The commands used in mongodb deployment are explained as below

numactl: A tool used to control NUMA (Non-Uniform Memory Access) policy. It's used here to manage how memory is allocated.

interleave=all: Ensures memory is interleaved across all available NUMA nodes.

mongod: The command to start the MongoDB server.

wiredTigerCacheSizeGB: Sets the size of the WiredTiger cache, a storage engine used by MongoDB.

0.1: Specifies the cache size as 0.1 GB.

bind_ip: Determines the network interfaces MongoDB will listen on.

0.0.0.0: Tells MongoDB to listen on all network interfaces.

secrets.yaml

    apiVersion: v1
    kind: Secret
    metadata:
      namespace: three-tier
      name: mongo-sec
    type: Opaque
    data:
      password: cGFzc3dvcmQxMjM= # base64 of "password123"
      username: YWRtaW4= # base64 of "admin"
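The values under data in a Secret are base64-encoded, not encrypted, so anyone who can read the manifest can decode them. A minimal sketch of how such values can be produced and checked from the shell, assuming the base64 utility from GNU coreutils is available (the variable names are illustrative):

```shell
# Encode a value for the Secret's data field.
# printf '%s' avoids the trailing newline that a plain echo would add.
encoded_user=$(printf '%s' 'admin' | base64)
echo "$encoded_user"

# Decode an existing value to confirm what it actually contains.
decoded_pass=$(printf '%s' 'cGFzc3dvcmQxMjM=' | base64 --decode)
echo "$decoded_pass"
```

Decoding a value before applying the manifest is a quick way to catch a mismatch between the encoded string and the credential you meant to store.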

pv.yaml

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: mongo-pv
      namespace: three-tier
    spec:
      capacity:
        storage: 1Gi
      volumeMode: Filesystem
      accessModes:
      - ReadWriteOnce
      hostPath:
        path: /data/db

pvc.yaml

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mongo-volume-claim
      namespace: three-tier
    spec:
      accessModes:
      - ReadWriteOnce
      storageClassName: ""
      resources:
        requests:
          storage: 1Gi

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      namespace: three-tier
      name: mongodb-svc
    spec:
      selector:
        app: mongodb
      ports:
      - name: mongodb-svc
        protocol: TCP
        port: 27017
        targetPort: 27017

kubectl apply -f deployment.yaml

kubectl apply -f service.yaml

kubectl apply -f secrets.yaml

kubectl apply -f pv.yaml

kubectl apply -f pvc.yaml

kubectl get deployment -n three-tier

kubectl get service -n three-tier

kubectl get pv -n three-tier

kubectl get pvc -n three-tier

kubectl get secret -n three-tier
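Listing the resources shows that they exist, but not that the MongoDB pod has actually started. A small sketch of one way to wait for the Deployment to become available before moving on; the function name wait_for_mongodb and the 120-second timeout are assumptions, not part of the original setup:

```shell
NAMESPACE="three-tier"

# Hypothetical helper: block until the mongodb Deployment is rolled out,
# then list its pods using the app=mongodb label from the manifest.
wait_for_mongodb() {
  kubectl rollout status deployment/mongodb -n "$NAMESPACE" --timeout=120s \
    && kubectl get pods -n "$NAMESPACE" -l app=mongodb
}

# Usage (needs kubectl configured for the cluster):
#   wait_for_mongodb
```

If the rollout times out, kubectl describe pod on the MongoDB pod usually shows whether the Secret or the PersistentVolumeClaim is the missing piece.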

Now deploy the backend in the cluster

deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: api
      namespace: three-tier
      labels:
        role: api
        env: demo
    spec:
      replicas: 2
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 25%
      selector:
        matchLabels:
          role: api
      template:
        metadata:
          labels:
            role: api
        spec:
          imagePullSecrets:
          - name: ecr-registry-secret
          containers:
          - name: api
            image: public.ecr.aws/y2f6v9b3/threetierbackend:latest # change the image
            imagePullPolicy: Always
            env:
            - name: MONGO_CONN_STR
              value: mongodb://mongodb-svc:27017/todo?directConnection=true
            - name: MONGO_USERNAME
              valueFrom:
                secretKeyRef:
                  name: mongo-sec
                  key: username
            - name: MONGO_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: mongo-sec
                  key: password
            ports:
            - containerPort: 3500
            livenessProbe:
              httpGet:
                path: /ok
                port: 3500
              initialDelaySeconds: 2
              periodSeconds: 5
            readinessProbe:
              httpGet:
                path: /ok
                port: 3500
              initialDelaySeconds: 5
              periodSeconds: 5

To find the image URI to use in deployment.yaml, go to ECR -> Public registry -> Repositories -> backend -> View public listing.

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: api
      namespace: three-tier
    spec:
      ports:
      - port: 3500
        protocol: TCP
      type: ClusterIP
      selector:
        role: api

kubectl get pods -n three-tier

kubectl logs api-6d787979bd-ds5xw -n three-tier
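Besides reading the logs, the backend can be probed on the same /ok endpoint that the liveness and readiness probes use. A rough sketch, assuming kubectl and curl are installed on the machine and local port 3500 is free; the function name check_backend and the 3-second wait are illustrative choices:

```shell
# Hypothetical helper: forward the api Service to localhost, call /ok,
# then stop the port-forward again.
check_backend() {
  kubectl port-forward -n three-tier svc/api 3500:3500 >/dev/null 2>&1 &
  pf_pid=$!
  sleep 3   # give the port-forward a moment to start listening
  curl -fsS http://localhost:3500/ok
  status=$?
  kill "$pf_pid"
  return "$status"
}

# Usage (needs kubectl configured for the cluster):
#   check_backend && echo "backend is healthy"
```

Because the api Service is of type ClusterIP, it is not reachable from outside the cluster, which is why the sketch goes through a port-forward.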

Frontend tier deployment on cluster

deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: frontend
      namespace: three-tier
      labels:
        role: frontend
        env: demo
    spec:
      replicas: 1
      strategy:
        type: RollingUpdate
        rollingUpdate:
          maxSurge: 1
          maxUnavailable: 25%
      selector:
        matchLabels:
          role: frontend
      template:
        metadata:
          labels:
            role: frontend
        spec:
          imagePullSecrets:
          - name: ecr-registry-secret
          containers:
          - name: frontend
            image: public.ecr.aws/y2f6v9b3/threetierfrontend:latest
            imagePullPolicy: Always
            env:
            - name: REACT_APP_BACKEND_URL
              value: "http://mongodb-svc:3500/api/tasks"
            ports:
            - containerPort: 3000


If you don't have a domain name yet, set REACT_APP_BACKEND_URL in your frontend deployment as follows:

    env:
    - name: REACT_APP_BACKEND_URL
      value: "http://mongodb-svc:3500/api/tasks"

Once you set up the Ingress and ALB, you'll update this URL to use the domain name or URL exposed by the Ingress controller. For example:

    env:
    - name: REACT_APP_BACKEND_URL
      value: "http://your-domain.com/api/tasks"

This way, your frontend application remains decoupled from specific backend service URLs, making it easier to switch between different environments or configurations in the future.

service.yaml

    apiVersion: v1
    kind: Service
    metadata:
      name: frontend
      namespace: three-tier
    spec:
      ports:
      - port: 3000
        protocol: TCP
      type: ClusterIP
      selector:
        role: frontend

kubectl get svc -n three-tier

Install the ingress controller through Helm. Helm is a package manager for Kubernetes that helps you manage Kubernetes applications. Helm uses charts, which are packages of pre-configured Kubernetes resources, to deploy applications to Kubernetes clusters.

Set up the prerequisites for the AWS Load Balancer Controller first: download the load balancer IAM policy and create a service account for the controller.

curl -O https://raw.githubusercontent.com/kubernetes-sigs/aws-load-balancer-controller/v2.5.4/docs/install/iam_policy.json

aws iam create-policy --policy-name AWSLoadBalancerControllerIAMPolicy --policy-document file://iam_policy.json

eksctl utils associate-iam-oidc-provider --region=ca-central-1 --cluster=three-tier-cluster --approve

eksctl create iamserviceaccount --cluster=three-tier-cluster --namespace=kube-system --name=aws-load-balancer-controller --role-name AmazonEKSLoadBalancerControllerRoleforthreetier --attach-policy-arn=arn:aws:iam::654654392783:policy/AWSLoadBalancerControllerIAMPolicy --approve --region=ca-central-1

Change the account number and region in these commands to match your account. The policy name in the ARN must match the name used in aws iam create-policy.

The aws iam create-policy command creates the policy in AWS.

The eksctl create iamserviceaccount command creates the IAM role.

Deploy the AWS Load Balancer Controller

Install the controller with Helm. It creates the ALB for the Kubernetes cluster once an Ingress is applied.

sudo snap install helm --classic

helm repo add eks https://aws.github.io/eks-charts

helm repo update eks

helm repo list

helm install aws-load-balancer-controller eks/aws-load-balancer-controller -n kube-system --set clusterName=three-tier-cluster --set serviceAccount.create=false --set serviceAccount.name=aws-load-balancer-controller

kubectl get deployment -n kube-system aws-load-balancer-controller

kubectl apply -f full_stack_lb.yaml
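The contents of full_stack_lb.yaml are not shown in this post. The sketch below writes one plausible version of such a manifest from the shell, so treat every value in it as an assumption: the Ingress name mainlb, the routing of /api to the api Service on port 3500 and of / to the frontend Service on port 3000, and the ALB annotations. The host reuses the subdomain configured in the Route 53 steps of this post. Compare it with the file in the cloned repository before applying it.

```shell
# Write an illustrative Ingress manifest for the AWS Load Balancer Controller.
# Note: this overwrites any existing full_stack_lb.yaml in the current directory.
cat > full_stack_lb.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: mainlb              # assumed name
  namespace: three-tier
  annotations:
    alb.ingress.kubernetes.io/scheme: internet-facing
    alb.ingress.kubernetes.io/target-type: ip
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}]'
spec:
  ingressClassName: alb
  rules:
  - host: chaallenge.teamabc.net
    http:
      paths:
      - path: /api
        pathType: Prefix
        backend:
          service:
            name: api
            port:
              number: 3500
      - path: /
        pathType: Prefix
        backend:
          service:
            name: frontend
            port:
              number: 3000
EOF
echo "wrote full_stack_lb.yaml"
```

With target-type ip the ALB sends traffic straight to pod IPs, which is why the ClusterIP Services defined earlier are sufficient and no NodePort is needed.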

If you're leveraging existing deployment files from GitHub and integrating Helm for Kubernetes orchestration, Helm won't inherently install an Application Load Balancer (ALB) for you. However, you can create Helm charts that include ALB configurations alongside your existing deployment files.

To incorporate an ALB deployment into your Helm chart:

Identify or create a Helm chart for your application.

Add a new YAML file (e.g., alb-deployment.yaml) in the templates/ directory of your Helm chart.

In this YAML file, define Kubernetes resources for the ALB, such as a Service of type LoadBalancer and an Ingress resource with appropriate annotations for ALB integration.

Replace placeholder values in the YAML file with actual values from your existing deployment files.

When deploying or upgrading your Helm chart, Helm will include the ALB resources alongside your application components.

Two controller pods are created because the number of replicas in values.yaml is 2.

Ingress Controller.

If you want to access your application from outside your Kubernetes cluster, you will need to expose your services to the external world. In such cases, you can use Kubernetes Ingress to route external traffic to your services.

Here's a general outline of what you need to do:

Deploy an Ingress controller in your Kubernetes cluster. This controller is responsible for managing incoming traffic and routing it to the appropriate services.

Define an Ingress resource that specifies how traffic should be routed. This includes specifying the hostnames, paths, and backend services.

Configure DNS to point your domain name to the IP address or DNS name of your Ingress controller's load balancer. This step is necessary if you want to use a custom domain name to access your application.

Once the Ingress controller and resource are configured, external traffic will be routed to your services based on the rules defined in the Ingress resource.

ALB is up and running.

Here are the steps to purchase a domain name on Route 53, create a subdomain (chaallenge.teamabc.net), and add a CNAME record pointing to a load balancer DNS name:

Purchase a Domain on Route 53:

Go to the AWS Management Console.

Navigate to Route 53.

Click on "Registered domains" and then "Register domain."

Follow the on-screen instructions to search for and purchase your desired domain.

Create a Subdomain and CNAME Record:

After purchasing the domain, go to the Route 53 dashboard.

In the navigation pane, select "Hosted zones."

Choose the hosted zone for your domain (e.g., teamabc.net).

Click on "Create Record."

For "Record name," enter "chaallenge".

Select "CNAME" as the record type.

In the "Value/Route traffic to" field, enter the load balancer DNS name (e.g., k8s-workshop-mainlb-7f430e3450-1932839287.c..).

Set the TTL (Time to Live) based on your preference (e.g., 300 seconds).

Save the record.

This creates a CNAME record for the subdomain "chaallenge.teamabc.net" that routes traffic to the specified load balancer DNS name.

Remember that DNS changes might not be immediately reflected, and it could take some time for the changes to propagate across the internet.

The frontend, backend, and database tiers are up and running.

Open a shell in the MongoDB pod and use the todo database to check the content that was saved through the frontend.
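One way to do this check without an interactive session is sketched below. It assumes the legacy mongo shell that ships with the mongo:4.4.6 image and that the server accepts unauthenticated connections from inside the pod; if authentication is enforced in your setup, pass credentials as well. The function name check_todo_db is illustrative.

```shell
# Hypothetical helper: list the collections in the todo database
# by running the mongo shell inside the mongodb Deployment's pod.
check_todo_db() {
  kubectl exec -n three-tier deploy/mongodb -- \
    mongo todo --quiet --eval 'printjson(db.getCollectionNames())'
}

# Usage (needs kubectl configured for the cluster):
#   check_todo_db
```

Once the collection name is known, the same pattern with db.<collection>.find() in the --eval string prints the stored documents.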

Delete EKS cluster.
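A minimal teardown sketch, using the cluster name and region from the eksctl create cluster command earlier in this post. The function name delete_cluster is an assumption, as is the step that removes the Ingress first so the controller gets a chance to delete the ALB it created.

```shell
CLUSTER_NAME="three-tier-cluster"
REGION="ca-central-1"

# Hypothetical helper: remove the Ingress, then delete the cluster
# and the CloudFormation stacks that eksctl created for it.
delete_cluster() {
  kubectl delete -f full_stack_lb.yaml --ignore-not-found
  eksctl delete cluster --name "$CLUSTER_NAME" --region "$REGION"
}

# Usage (this is destructive, run it only when you are done):
#   delete_cluster
```

Deleting the cluster does not remove the ECR repositories, the Route 53 records, or the EC2 instance used for building images, so clean those up separately to avoid charges.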

As we wrap up our journey through the implementation of a three-tier architecture using Amazon EKS, we've witnessed the seamless integration of React.js for the frontend, Node.js for the backend, and MongoDB for the database. Leveraging the power of Kubernetes orchestration with Amazon EKS, we've containerized our applications, ensuring scalability, maintainability, and efficient resource utilization.

The deployment process, from creating Docker images to pushing them to Amazon ECR, has been streamlined with the AWS CLI. The integration of the Amazon Application Load Balancer (ALB) further enhances our application's accessibility.

By creating subdomains and configuring Route 53, we've added a personalized touch to our application's URL, making it accessible to users worldwide.

As you embark on your own projects, feel free to explore additional AWS services and functionalities to enhance your three-tier architecture further. Amazon EKS provides a solid foundation for containerized applications, and the possibilities for expansion and optimization are vast.

May your three-tier applications thrive in the cloud, and happy coding!

Feel free to ask me any doubts. Contact : pardeep17.kp@gmail.com